15 Common JSON Errors and How to Fix Them: Complete Debugging Guide

It’s 3 AM. Your API integration has been working fine for weeks. Suddenly, your application crashes with a cryptic error message: SyntaxError: Unexpected token } in JSON at position 247.

You stare at the JSON. It looks fine. You add a console.log. Still broken. You copy-paste it into a validator. Red underlines everywhere. But where’s the actual problem?

If you’ve been there, you know the frustration. JSON errors are deceptively simple—one missing comma can waste an hour of your time. The error messages often point to the wrong line. And sometimes, the JSON looks completely valid until you realize there’s an invisible character breaking everything.

This guide walks through the 15 most common JSON errors you’ll encounter, with real examples from production systems, actual error messages from different environments, and practical solutions that work. By the end, you’ll be able to spot and fix JSON issues in seconds instead of hours.

Why JSON Errors Are So Common (And So Frustrating)

JSON’s simplicity is both its strength and weakness. The entire specification fits on a single page. But that simplicity means strict rules—and strict rules mean any tiny mistake breaks everything.

The three reasons JSON errors are so common:

- No forgiving syntax - Unlike JavaScript, JSON doesn’t allow trailing commas, comments, or single quotes. One tiny deviation and the parser fails.

- Manual editing - Developers often hand-write JSON for configuration files or API testing. Unlike generated JSON from code, manual JSON is error-prone.

- Copy-paste mistakes - Copying JSON from documentation, Stack Overflow, or colleagues often introduces invisible characters or encoding issues.

The result? Even experienced developers spend hours debugging JSON syntax errors. Let’s fix that.

Understanding JSON Error Messages

Before we dive into specific errors, let’s decode what those error messages actually mean.

Browser Console Errors

Chrome/Edge:

Uncaught SyntaxError: Unexpected token } in JSON at position 247

Firefox:

SyntaxError: JSON.parse: unexpected character at line 5 column 12 of the JSON data

Safari:

SyntaxError: JSON Parse error: Unexpected identifier "undefined"

Node.js Errors

SyntaxError: Unexpected token } in JSON at position 247

at JSON.parse (<anonymous>)

at Object.<anonymous> (/app/server.js:15:23)

What These Tell You

- “Unexpected token” - The parser found a character it didn’t expect

- “Position X” - Character count from the start (including whitespace)

- “Line X column Y” - Exact location in formatted JSON

- The actual problem - Often one or two characters BEFORE the reported position

Pro tip: The error position usually points to where the parser gave up, not where your mistake is. Always check the line BEFORE the error.
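To turn a raw "position N" into something you can find in an editor, a small helper can translate the offset into a line and column. This is a minimal sketch, not a standard API: the function names (extractPosition, positionToLineColumn, describeJsonError) are made up for illustration, and it assumes the engine's message contains "position N", which not every engine or version includes.

```javascript
// Pull the character offset out of a message such as
// "Unexpected token } in JSON at position 247". Returns null when absent.
function extractPosition(message) {
  const match = /position (\d+)/.exec(message);
  return match ? Number(match[1]) : null;
}

// Convert a 0-based character offset into a 1-based line and column.
function positionToLineColumn(text, position) {
  const lines = text.slice(0, position).split('\n');
  return { line: lines.length, column: lines[lines.length - 1].length + 1 };
}

// Try to parse; on failure, report where the parser gave up.
function describeJsonError(text) {
  try {
    JSON.parse(text);
    return null; // valid JSON, nothing to report
  } catch (err) {
    const position = extractPosition(err.message);
    const location = position === null ? null : positionToLineColumn(text, position);
    return { message: err.message, position, location };
  }
}
```

Calling describeJsonError(text) returns null for valid input. Otherwise, start reading at the reported line and work backwards, since the real mistake is often just before it.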

Error #1: Missing Comma Between Properties

Error messages you’ll see:

- SyntaxError: Unexpected token '{' in JSON

- Expected ',' or '}'

- Unexpected string in JSON

This is the #1 most common JSON error. You forget a comma between object properties, and the parser chokes.

The Problem

{

"user": {

"id": 1001

"name": "Sarah Chen"

"email": "sarah@example.com"

}

}

Why it breaks: After parsing "id": 1001, the parser expects either:

- A comma (to continue with more properties)

- A closing brace } (to end the object)

Instead, it finds "name", which is invalid syntax.

The Fix

{

"user": {

"id": 1001,

"name": "Sarah Chen",

"email": "sarah@example.com"

}

}

Add commas after every property except the last one.

Real-World Example

This happened to me during a midnight deployment. I was updating a configuration file:

{

"database": {

"host": "localhost",

"port": 5432,

"name": "production_db"

"ssl": true

}

}

The missing comma after "production_db" crashed the entire application on startup. The error message said “line 5” but the problem was line 4. Cost us 15 minutes of downtime.

How to Prevent This

1. Use a formatter as you type - Our JSON Formatter catches missing commas instantly

2. Format before saving - Always run your JSON through a validator before committing

3. Use syntax highlighting - Most editors highlight syntax errors in real-time

4. Count your commas - If you have N properties, you need N-1 commas

Pro tip: When adding a new property at the end of an object, add the comma to the previous line FIRST, then add your new property. This prevents forgetting the comma.

Error #2: Trailing Comma After Last Property

Error messages you’ll see:

- SyntaxError: Unexpected token } in JSON

- Trailing comma in JSON

- Expected property name or '}'

This is the opposite of error #1. You add a comma after the last property, which JSON strictly forbids.

The Problem

{

"product": {

"id": "prod_123",

"name": "Wireless Mouse",

"price": 29.99,

}

}

Why it breaks: JSON was designed for machine parsing, not human editing. The comma signals “another property coming,” so the parser expects a property name next. When it finds the closing brace instead, it stops with an error.

Historical note: JavaScript has allowed trailing commas in object literals since ES5 (and in array literals from the start), which is why developers constantly make this mistake in JSON. We’re trained to add trailing commas in JavaScript, but JSON is stricter.

The Fix

{

"product": {

"id": "prod_123",

"name": "Wireless Mouse",

"price": 29.99

}

}

Remove the comma after the last property.

The Array Version

Trailing commas are also invalid in arrays:

Wrong:

{

"tags": ["electronics", "accessories", "wireless",]

}

Correct:

{

"tags": ["electronics", "accessories", "wireless"]

}

Real-World Debugging Story

A developer on my team spent 2 hours debugging an API integration. The API was returning 400 Bad Request with “Invalid JSON.” The request looked perfect in Postman.

The problem? Postman’s “pretty” mode auto-fixed the trailing comma when displaying the request. But the actual request body sent to the server had the trailing comma. The server’s JSON parser rejected it.

Lesson: Never trust visual representations. Always check the raw request/response.

How to Prevent This

1. Use JSON.stringify() in code - When generating JSON programmatically, use the native serializer:

const data = {

product: {

id: "prod_123",

name: "Wireless Mouse",

price: 29.99, // Trailing comma OK in JavaScript

}

};

// JSON.stringify never emits a trailing comma, so the output is valid JSON

const json = JSON.stringify(data, null, 2);

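2. Configure your linter - ESLint’s comma-dangle rule flags trailing commas in JavaScript source, which keeps object literals safe to copy into JSON. ESLint only checks .json files when a JSON-aware plugin or parser is set up, so treat this as a complement to a JSON validator: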

{

"rules": {

"comma-dangle": ["error", "never"]

}

}

3. Use strict validators - Our Formatter tool highlights trailing commas immediately

Error #3: Using Single Quotes Instead of Double Quotes

Error messages you’ll see:

- SyntaxError: Unexpected token ' in JSON

- Unexpected character in JSON

- Invalid or unexpected token

JSON requires double quotes for strings. Single quotes aren’t allowed anywhere.

The Problem

{

'user': {

'name': 'Alice Johnson',

'role': 'admin'

}

}

Why it breaks: The JSON specification explicitly requires double quotes (") for all strings—both keys and values. This differs from JavaScript, which allows single quotes, double quotes, and template literals.

The Fix

{

"user": {

"name": "Alice Johnson",

"role": "admin"

}

}

Replace all single quotes with double quotes.

Why This Rule Exists

Historical reason: JSON was derived from JavaScript’s object literal notation, but it was designed to be language-agnostic. Different languages have different string quote conventions:

- Python: Single and double quotes

- Ruby: Single and double quotes

- PHP: Single and double quotes (different behavior)

- Java: Only double quotes for strings

By requiring only double quotes, JSON eliminates ambiguity and works consistently across all languages.

The Nested Quote Problem

What if your string contains double quotes? You must escape them:

Wrong:

{

"message": "She said "Hello" to me"

}

Correct:

{

"message": "She said \"Hello\" to me"

}

Use backslash (\) to escape internal double quotes.

Practical Replacement Strategy

If you have JavaScript with single quotes and need to convert to JSON:

JavaScript:

const config = {

apiUrl: 'https://api.example.com',

apiKey: 'sk_test_abc123',

timeout: 5000

};

JSON:

{

"apiUrl": "https://api.example.com",

"apiKey": "sk_test_abc123",

"timeout": 5000

}

Find/Replace tip: In most editors:

- Find: '

- Replace: "

- Replace All

But be careful—this also replaces apostrophes inside strings. Better: use JSON.stringify().

How to Prevent This

1. Always use JSON.stringify() - When converting JavaScript objects to JSON:

const data = { name: 'Alice' }; // Single quotes OK here

const json = JSON.stringify(data); // Produces: {"name":"Alice"}

2. Copy from JSON sources - When copying examples, prefer sources that show proper JSON (API docs, JSON files) over JavaScript code

3. Use JSON mode in editors - Set your editor to “JSON” mode, not “JavaScript” mode. Most editors will then enforce double quotes.

Error #4: Unquoted Object Keys

Error messages you’ll see:

- SyntaxError: Unexpected token in JSON

- Expected property name

- Unexpected identifier

JavaScript allows unquoted object keys. JSON doesn’t.

The Problem

{

user: {

name: "Bob Smith",

age: 35,

active: true

}

}

Why it breaks: Every object key in JSON must be a string enclosed in double quotes. The parser sees user: and doesn’t know what to do with the bare word.

The Fix

{

"user": {

"name": "Bob Smith",

"age": 35,

"active": true

}

}

Add double quotes around all keys.

JavaScript vs JSON Confusion

This error happens because JavaScript is more forgiving:

Valid JavaScript:

const config = {

apiUrl: "https://api.example.com", // Quotes OK

timeout: 5000, // Unquoted OK

'max-connections': 100 // Quotes required for hyphens

};

Valid JSON:

{

"apiUrl": "https://api.example.com",

"timeout": 5000,

"max-connections": 100

}

In JSON, quotes are always required for keys, regardless of whether the key contains special characters.

Special Characters in Keys

If your key contains spaces, hyphens, or special characters, you need quotes in both JavaScript and JSON:

{

"first-name": "Alice",

"last name": "Johnson",

"email@domain": "alice@example.com",

"user:id": 1001

}

All of these are valid JSON (though some are poor naming choices).

How to Prevent This

1. Convert JavaScript objects properly:

// JavaScript object with unquoted keys

const data = {

name: "Alice",

age: 30

};

// Convert to JSON (quotes all keys automatically)

const json = JSON.stringify(data, null, 2);

console.log(json);

// Output:

// {

// "name": "Alice",

// "age": 30

// }

2. Copy-paste from JSON validators - After validating your JSON in our Formatter tool, copy the corrected version

3. Use JSON templates - Start with valid JSON templates rather than writing from scratch

Error #5: Comments in JSON

Error messages you’ll see:

- SyntaxError: Unexpected token / in JSON

- Unexpected character

- Invalid or unexpected token

JSON doesn’t support comments. Not single-line, not multi-line, not at all.

The Problem

{

// User configuration

"user": {

"name": "Charlie",

/* Role determines permissions */

"role": "editor"

}

}

Why it breaks: The JSON specification intentionally excludes comments. Douglas Crockford (JSON’s creator) explained: “I removed comments from JSON because I saw people were using them to hold parsing directives, a practice which would have destroyed interoperability.”

The Fix (Option 1): Remove Comments

{

"user": {

"name": "Charlie",

"role": "editor"

}

}

Simply delete the comments.

The Fix (Option 2): Use a Comment Field

{

"_comment": "User configuration - role determines permissions",

"user": {

"name": "Charlie",

"role": "editor"

}

}

Store comments as data using underscore-prefixed keys. Your application can ignore these fields.
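One way to have the application ignore such fields is to drop them while parsing. The sketch below assumes the convention that every key starting with an underscore is a comment; the helper name parseIgnoringComments is hypothetical. It relies on the reviver argument of JSON.parse, where returning undefined removes a property.

```javascript
// Parse JSON and discard every property whose key starts with "_".
// Assumption: underscore-prefixed keys are comments, never real data.
function parseIgnoringComments(text) {
  return JSON.parse(text, (key, value) =>
    key.startsWith('_') ? undefined : value
  );
}

const config = parseIgnoringComments(
  '{"_comment": "User configuration", "user": {"name": "Charlie", "role": "editor"}}'
);
console.log(config); // { user: { name: 'Charlie', role: 'editor' } }
```

If some real keys in your data also start with an underscore, pick a more specific marker such as the exact key "_comment".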

The Fix (Option 3): Use a Different Format

If you need comments for configuration files, consider YAML instead:

# User configuration
user:
  name: Charlie
  # Role determines permissions
  role: editor

YAML supports comments natively and is designed for human-edited configuration files.

Why No Comments Can Be Good

Advantages of JSON’s no-comment policy:

- Simpler parsers - No need to handle comment syntax

- Faster parsing - Skip comment detection logic

- No ambiguity - Can’t hide malicious code in comments

- Forces clarity - Data should be self-documenting through good naming

Real-World Workaround

Many development teams use this pattern:

config.json:

{

"database": {

"host": "localhost",

"port": 5432,

"connectionTimeout": 30000

}

}

config.md (documentation):

# Configuration Documentation

## Database Settings

- `connectionTimeout`: Set to 30000 (30 seconds) to handle slow network conditions

Increased from 15000 on 2025-01-15 due to production timeouts

Keep JSON clean and document separately.

JSON5 and JSONC: Alternatives with Comments

If you control both the producer and consumer of your JSON:

JSON5 (JavaScript extension):

{

// Comments allowed!

user: {

name: "Charlie",

role: "editor", // Trailing comma OK

}

}

JSONC (JSON with Comments - used by VS Code):

{

// User configuration

"user": {

"name": "Charlie",

"role": "editor"

}

}

Warning: These are non-standard. Only use if all your tools support them. APIs should always use standard JSON.

How to Prevent This

1. Use external documentation - Keep comments in separate .md files

2. Choose the right format - Configuration files? Use YAML. API data? Use JSON.

3. Self-documenting data - Use descriptive key names:

Instead of:

{

"t": 30000 // timeout in milliseconds

}

Use:

{

"connectionTimeoutMs": 30000

}

Error #6: Unterminated String Literal

Error messages you’ll see:

- SyntaxError: Unterminated string literal

- Unexpected end of JSON input

- Unexpected token ILLEGAL

This error occurs when you forget to close a string with a double quote.

The Problem

{

"message": "Welcome to our application,

"status": "active"

}

The string after "message": is never closed. The parser fails when it reaches the raw line break, because an unescaped newline is not allowed inside a JSON string.

The Fix

{

"message": "Welcome to our application",

"status": "active"

}

Close the string before the line break.

Multi-Line Strings: The Right Way

JSON doesn’t support multi-line strings directly. If you need a long string, you have options:

Option 1: One line with \n

{

"description": "This is line one.\nThis is line two.\nThis is line three."

}

Option 2: Concatenation in your code

const data = {

description:

"This is line one. " +

"This is line two. " +

"This is line three."

};

const json = JSON.stringify(data);

Option 3: Array of lines

{

"descriptionLines": [

"This is line one.",

"This is line two.",

"This is line three."

]

}

Join them in your application: descriptionLines.join('\n')

Special Characters That Break Strings

Double quotes, backslashes, and control characters must be escaped inside JSON strings. Any character can also be written as a \uXXXX escape:

{

"quote": "She said \"Hello\"",

"backslash": "Path: C:\\Users\\Documents",

"newline": "Line one\nLine two",

"tab": "Column1\tColumn2",

"carriageReturn": "Text\rOverwritten",

"backspace": "Text\b",

"formFeed": "Page\f",

"unicodeChar": "Heart: \u2764"

}

Common mistake: Windows file paths without escaped backslashes:

Wrong:

{

"path": "C:\Users\Documents\file.txt"

}

Correct:

{

"path": "C:\\Users\\Documents\\file.txt"

}

Or use forward slashes, which most Windows APIs and tools accept:

{

"path": "C:/Users/Documents/file.txt"

}

How to Prevent This

1. Use JSON.stringify() for user input:

const userMessage = 'She said "Hello" and left';

const data = { message: userMessage };

const json = JSON.stringify(data);

// Automatically escapes: {"message":"She said \"Hello\" and left"}

2. Validate before parsing - Run JSON through our Formatter tool to catch unterminated strings

3. Use template literals carefully:

// WRONG - Breaks JSON if userName contains quotes or backslashes
const unsafeJson = `{"name": "${userName}"}`;

// RIGHT - Let JSON.stringify handle the escaping
const safeJson = JSON.stringify({ name: userName });

Error #7: Invalid Number Formats

Error messages you’ll see:

- SyntaxError: Unexpected token N in JSON

- Unexpected number in JSON

- Invalid or unexpected token

JavaScript has several number formats that aren’t valid in JSON.

The Problem

{

"infinity": Infinity,

"notANumber": NaN,

"undefined": undefined,

"hexadecimal": 0xFF,

"octal": 0o77,

"binary": 0b1010

}

None of these are valid JSON.

The Fix

{

"infinity": null,

"notANumber": null,

"undefined": null,

"hexadecimal": 255,

"octal": 63,

"binary": 10

}

Valid JSON number formats:

- Integers: 42, -17, 0

- Decimals: 3.14, -0.001, 2.0

- Scientific notation: 1.5e10, 3.2e-5

Invalid JSON number formats:

- Infinity and -Infinity

- NaN (Not a Number)

- Hexadecimal (0x…)

- Octal (0o…)

- Binary (0b…)

- Leading zeros (except for 0 itself)

Handling Special Number Cases

Strategy 1: Use null

{

"calculatedValue": null,

"reason": "Division by zero resulted in Infinity"

}

Strategy 2: Use strings

{

"specialValue": "Infinity",

"processingNote": "Convert to number in application"

}

Strategy 3: Use alternative representations

{

"veryLargeNumber": "9007199254740992",

"note": "Exceeds JavaScript safe integer range"

}
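Strategy 2 can be applied consistently with a replacer and reviver pair. The sketch below is one possible approach, with hypothetical helper names. It assumes that no legitimate string in your data is exactly "Infinity", "-Infinity", or "NaN", because the reviver would turn such strings into numbers.

```javascript
const SPECIAL_NUMBERS = ['Infinity', '-Infinity', 'NaN'];

// Serialize non-finite numbers as strings instead of letting them become null.
function stringifyWithSpecialNumbers(value) {
  return JSON.stringify(value, (key, v) =>
    typeof v === 'number' && !Number.isFinite(v) ? String(v) : v
  );
}

// Restore the special strings to numbers while parsing.
function parseWithSpecialNumbers(text) {
  return JSON.parse(text, (key, v) =>
    SPECIAL_NUMBERS.includes(v) ? Number(v) : v
  );
}

const json = stringifyWithSpecialNumbers({ ratio: 10 / 0, count: 3 });
console.log(json); // {"ratio":"Infinity","count":3}
console.log(parseWithSpecialNumbers(json).ratio === Infinity); // true
```

Both sides of the exchange have to agree on this convention; a consumer that is unaware of it simply sees strings.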

Large Number Precision Issues

JavaScript’s Number type can’t safely represent integers larger than 2^53 - 1 (9,007,199,254,740,991).

Problem:

{

"userId": 9007199254740993

}

This number might lose precision when parsed.

Solution:

{

"userId": "9007199254740993"

}

Use strings for large IDs.

Real-world example: Twitter’s API returns tweet IDs as both numbers and strings:

{

"id": 1234567890123456768,

"id_str": "1234567890123456768"

}

The id_str field ensures precision across all platforms.
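The precision loss is easy to reproduce. The snippet below is a small illustration using a made-up payload with the same id and id_str shape; it shows the numeric field being rounded while the string field keeps every digit and can be promoted to BigInt when arithmetic is needed.

```javascript
const raw = '{"id": 9007199254740993, "id_str": "9007199254740993"}';
const parsed = JSON.parse(raw);

// The numeric field has been rounded to the nearest representable double.
const numericIsSafe = Number.isSafeInteger(parsed.id);

// The string field keeps every digit and can become a BigInt for arithmetic.
const exactId = BigInt(parsed.id_str);
const nextId = exactId + 1n;

console.log(String(parsed.id));  // 9007199254740992
console.log(numericIsSafe);      // false
console.log(nextId.toString());  // 9007199254740994
```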

Leading Zeros

Invalid:

{

"zipCode": 02134

}

Leading zeros aren’t allowed (except for 0 itself).

Valid:

{

"zipCode": "02134"

}

Store as string if leading zeros matter.

How to Prevent This

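1. Check for special values before serialization. JSON.stringify() already turns Infinity and NaN into null without warning; a replacer makes that conversion explicit and gives you one place to log or reject such values: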

function safeStringify(obj) {

return JSON.stringify(obj, (key, value) => {

if (typeof value === 'number') {

if (!isFinite(value)) {

return null; // Convert Infinity/NaN to null

}

}

return value;

});

}

const data = {

result: 10 / 0, // Infinity

invalid: Math.sqrt(-1) // NaN

};

console.log(safeStringify(data));

// Output: {"result":null,"invalid":null}

2. Use string representation for edge cases:

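const data = {
  // Keep the digits in a string: the numeric literal 9007199254740993
  // would already be rounded to 9007199254740992 before String() sees it
  bigNumber: "9007199254740993",
  hexValue: parseInt('0xFF', 16) // 255
};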

3. Validate API responses - Use our Formatter tool to validate JSON before processing

Error #8: Duplicate Keys

Error messages you’ll see:

- (No error in most parsers!)

- Behavior: Last value wins

This is a silent error—most JSON parsers won’t complain, but your data won’t be what you expect.

The Problem

{

"user": {

"name": "Alice",

"email": "alice@example.com",

"role": "admin",

"email": "alice.new@example.com"

}

}

The email key appears twice. Most parsers will use the last occurrence.

Result after parsing:

{

user: {

name: "Alice",

email: "alice.new@example.com", // Second value wins

role: "admin"

}

}

Why This Is Dangerous

1. Silent data loss:

{

"settings": {

"timeout": 30,

"maxRetries": 5,

"timeout": 60

}

}

You think timeout is 30, but it’s actually 60.

2. Merge conflicts:

When merging JSON from different sources, duplicates can hide:

{

"config": {

"apiKey": "old_key_abc123",

"endpoint": "https://api.example.com",

"apiKey": "new_key_xyz789"

}

}

Which key is active? Depends on the parser.

3. Debugging nightmares:

You update a value but it doesn’t change. Why? Because there’s a duplicate further down that’s overriding it.

The Fix

Remove the duplicate key, keeping only one:

{

"user": {

"name": "Alice",

"email": "alice.new@example.com",

"role": "admin"

}

}

How Different Systems Handle Duplicates

JavaScript (JSON.parse):

const json = '{"name": "First", "name": "Second"}';

const obj = JSON.parse(json);

console.log(obj.name); // "Second" - last wins

Python (json.loads):

import json

data = json.loads('{"name": "First", "name": "Second"}')

print(data['name']) # "Second" - last wins

Some validators:

Strict validators may reject duplicate keys entirely.

Real-World Example

I once debugged a production issue where an API configuration had:

{

"rateLimits": {

"requestsPerMinute": 100,

"requestsPerHour": 6000,

"requestsPerMinute": 60

}

}

The application was rate-limiting at 60 requests/minute, not 100. Finding the duplicate took an hour because the file was 800 lines long.

How to Prevent This

1. Use validation tools - Our Formatter tool with strict mode highlights duplicates

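2. Use linters - ESLint’s no-dupe-keys rule rejects duplicate keys in JavaScript object literals. For .json files, use a JSON-aware linter or plugin that offers an equivalent check: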

// .eslintrc.json

{

"rules": {

"no-dupe-keys": "error"

}

}

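3. Generate JSON programmatically:

When you build the data in code and serialize it with JSON.stringify(), the output can never contain a duplicate key. Note that JavaScript itself accepts a repeated key in an object literal (the last value wins), so rely on a linter or TypeScript to flag it in source code:

const config = {
  timeout: 30,
  timeout: 60 // Not a syntax error: the last value silently wins (no-dupe-keys flags it)
};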

4. Use version control diffs - Review JSON changes carefully in pull requests

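5. Automated testing. JSON.parse() has already discarded duplicates by the time it returns, so the check has to scan the raw text, tracking one set of keys per open object:

function hasDuplicateKeys(json) {
  JSON.parse(json); // Throws on invalid JSON, so the scan below can assume valid syntax
  const stack = []; // One Set of keys per open object, null for each open array
  const stringRe = /"(?:[^"\\]|\\.)*"/y;
  const colonRe = /\s*:/y;
  for (let i = 0; i < json.length; i++) {
    const ch = json[i];
    if (ch === '{') stack.push(new Set());
    else if (ch === '[') stack.push(null);
    else if (ch === '}' || ch === ']') stack.pop();
    else if (ch === '"') {
      stringRe.lastIndex = i;
      const raw = stringRe.exec(json)[0];
      i += raw.length - 1; // Jump to the closing quote
      colonRe.lastIndex = i + 1;
      if (!colonRe.test(json)) continue; // A string value, not a key
      const key = JSON.parse(raw); // Decode escapes so "a" and "\u0061" match
      const keys = stack[stack.length - 1];
      if (keys.has(key)) throw new Error(`Duplicate key found: ${key}`);
      keys.add(key);
    }
  }
  return false;
}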

Error #9: Invalid UTF-8 Characters

Error messages you’ll see:

- SyntaxError: JSON.parse: bad control character in string literal

- Invalid character

- Unexpected token

JSON must be encoded in UTF-8. Other encodings or invalid UTF-8 sequences cause parsing errors.

The Problem

Control characters (ASCII 0-31) aren’t allowed in JSON strings without escaping:

{

"message": "Line one[actual newline character here]Line two"

}

The actual newline character (ASCII 10) breaks parsing.

The Fix

Escape the control character:

{

"message": "Line one\nLine two"

}

Common Control Character Issues

Tab character:

// WRONG - actual tab character

{"data": "Column1 Column2"}

// CORRECT

{"data": "Column1\tColumn2"}

Carriage return:

// WRONG

{"text": "Windows line ending

"}

// CORRECT

{"text": "Windows line ending\r\n"}

Null byte:

// WRONG - null byte (ASCII 0)

{"data": "Text[null]More text"}

// CORRECT

{"data": "Text\u0000More text"}
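Because these characters are usually invisible in an editor, it can help to locate them programmatically. The following is a rough sketch with a hypothetical helper name, findControlCharacters. It walks the raw text, tracks whether it is inside a string, and reports each unescaped control character with its 0-based offset. It assumes the text is otherwise close to valid JSON.

```javascript
// Report unescaped control characters (code points below 0x20) inside strings.
function findControlCharacters(text) {
  const hits = [];
  let inString = false;
  for (let i = 0; i < text.length; i++) {
    const ch = text[i];
    if (inString && ch === '\\') {
      i++; // skip the escaped character, e.g. the quote in \"
      continue;
    }
    if (ch === '"') {
      inString = !inString;
      continue;
    }
    if (inString && ch.charCodeAt(0) < 0x20) {
      hits.push({ index: i, code: ch.charCodeAt(0) });
    }
  }
  return hits;
}

// A real tab character inside the string value is reported with code 9.
console.log(findControlCharacters('{"data": "Column1\tColumn2"}'));
```

Line breaks and tabs between tokens are legal JSON whitespace, so the helper ignores anything outside a string.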

Unicode Characters

JSON fully supports Unicode characters:

Valid:

{

"greeting": "Hello 世界",

"emoji": "👋🌍",

"symbol": "€",

"math": "∑ ∫ ∂"

}

Alternative (escaped):

{

"greeting": "Hello \u4e16\u754c",

"emoji": "\ud83d\udc4b\ud83c\udf0d"

}

Both are valid. Escaped form is safer for systems with encoding issues.

Byte Order Mark (BOM) Problems

Some text editors add a BOM (Byte Order Mark) at the start of UTF-8 files:

EF BB BF {"name": "Alice"}

Many JSON parsers, including JSON.parse() in JavaScript, reject the BOM, causing “Unexpected token” errors.

How to detect BOM:

# View file as hexadecimal

hexdump -C file.json | head -n 1

# Look for: ef bb bf at the start

How to remove BOM:

# Using dos2unix

dos2unix file.json

# Using sed

sed -i '1s/^\xEF\xBB\xBF//' file.json

# In VS Code: File → Preferences → Settings → "files.encoding" → "UTF-8"
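When you cannot fix the file itself, for example because it comes from a third party, the BOM can be stripped in code before parsing. A minimal sketch, assuming the file has already been decoded to a string (a UTF-8 BOM then shows up as the single character U+FEFF); the helper name parseJsonWithoutBom is hypothetical.

```javascript
// Remove a leading byte order mark (U+FEFF), if present, then parse.
function parseJsonWithoutBom(text) {
  const clean = text.charCodeAt(0) === 0xfeff ? text.slice(1) : text;
  return JSON.parse(clean);
}

const withBom = '\uFEFF{"name": "Alice"}';
console.log(parseJsonWithoutBom(withBom).name); // Alice
```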

Character Encoding Issues

Problem: File saved in wrong encoding (Windows-1252, ISO-8859-1, etc.)

Symptoms:

- Strange characters where accents should be

- � replacement characters

- Parsing failures

Solution:

- Check file encoding:

file -i data.json

# Output: data.json: application/json; charset=utf-8

- Convert to UTF-8:

iconv -f ISO-8859-1 -t UTF-8 input.json > output.json

- Set editor encoding:

- VS Code: Bottom right corner → Select encoding → “UTF-8”

- Sublime: File → Save with Encoding → UTF-8
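The same check can be done in code before parsing. The sketch below assumes a runtime that provides the WHATWG TextDecoder (modern browsers and recent Node.js versions) and raw bytes as input; the helper names are made up. With fatal: true the decoder throws on invalid UTF-8 instead of silently inserting � characters.

```javascript
// Decode bytes as UTF-8 and throw a TypeError on any invalid sequence.
function decodeUtf8Strict(bytes) {
  return new TextDecoder('utf-8', { fatal: true }).decode(bytes);
}

// Decode strictly, then parse. Encoding problems surface before JSON.parse runs.
function parseJsonBytes(bytes) {
  return JSON.parse(decodeUtf8Strict(bytes));
}

// {"n":"é"} saved as ISO-8859-1: é is the single byte 0xE9, which is not valid UTF-8 here.
const latin1Bytes = Uint8Array.from([0x7b, 0x22, 0x6e, 0x22, 0x3a, 0x22, 0xe9, 0x22, 0x7d]);
try {
  parseJsonBytes(latin1Bytes);
} catch (err) {
  console.log('Not valid UTF-8:', err.name);
}
```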

How to Prevent This

1. Use JSON.stringify() - Automatically escapes control characters:

const data = {

message: "Line one\nLine two\tTabbed"

};

const json = JSON.stringify(data);

// Output: {"message":"Line one\nLine two\tTabbed"}

2. Configure editor encoding:

- Always save as UTF-8 without BOM

- Enable “Show whitespace” to spot tabs/newlines

3. Validate after editing - Use our Formatter tool to catch encoding issues

4. Use .editorconfig:

# .editorconfig

[*.json]

charset = utf-8

end_of_line = lf

insert_final_newline = true

trim_trailing_whitespace = true

Error #10: Wrong Data Type for Value

Error messages you’ll see:

- (Usually no parser error, but application logic fails)

- Type errors in application code

A JSON parser accepts any valid value for any key; it has no idea which types your application expects.

The Problem

{

"user": {

"id": "1001",

"age": "25",

"active": "true",

"score": "95.5"

}

}

Everything is a string, but your code expects:

- id as number

- age as number

- active as boolean

- score as number

Result: Application logic breaks:

const { user } = JSON.parse(jsonString); // The JSON above wraps the fields in "user"

if (user.active) { // String "true" is truthy!

console.log("User is active");

}

user.age += 1; // "25" + 1 = "251" (string concatenation)

The Fix

Use correct JSON data types:

{

"user": {

"id": 1001,

"age": 25,

"active": true,

"score": 95.5

}

}

JSON Data Types Reference

String:

{"name": "Alice", "empty": ""}

Must use double quotes. Empty string is valid.

Number:

{"count": 42, "price": 19.99, "negative": -17, "scientific": 1.5e10}

No quotes. Integers and decimals both use the number type.

Boolean:

{"active": true, "verified": false}

Lowercase only. No quotes.

Null:

{"middleName": null}

Represents absence of value. Lowercase only.

Array:

{"tags": ["red", "green", "blue"], "numbers": [1, 2, 3]}

Ordered list. Can contain mixed types.

Object:

{"user": {"name": "Alice", "age": 30}}

Nested structure. Keys must be strings.

Type Coercion Issues

String to number:

const data = JSON.parse('{"age": "25"}');

const age = Number(data.age); // Explicit conversion

// Or: const age = +data.age;

String to boolean:

const data = JSON.parse('{"active": "true"}');

// WRONG: if (data.active) - string "true" is truthy

// WRONG: if (Boolean(data.active)) - "false" is also truthy!

// RIGHT:

const active = data.active === "true";

// Or: const active = JSON.parse(data.active);

Number stored as string:

const data = JSON.parse('{"id": "1001"}');

// This might break database queries expecting a number

Real-World Example: API Type Mismatches

I worked on an integration where the API documentation said:

{

"userId": 12345,

"isActive": true

}

But the actual API returned:

{

"userId": "12345",

"isActive": "1"

}

Our application broke because any non-empty string is truthy:

if (user.isActive) { // "1" is truthy, and "0" would be too

// This always executed, even for inactive users

}

Lesson: Always validate API responses. Never trust documentation alone.
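A defensive option for this kind of mismatch is to normalize the fields at the boundary and fail loudly on anything unexpected. The sketch below uses the userId and isActive fields from the example. The helper names and the set of accepted spellings ("true", "1", "false", "0") are assumptions to adapt to the API in question.

```javascript
// Accept the common spellings of a boolean; reject everything else.
function toBoolean(value) {
  if (value === true || value === 1 || value === 'true' || value === '1') return true;
  if (value === false || value === 0 || value === 'false' || value === '0') return false;
  throw new TypeError(`Cannot interpret ${JSON.stringify(value)} as a boolean`);
}

// Accept an integer or a non-empty string holding one; reject everything else.
function toInteger(value) {
  const n = typeof value === 'string' && value.trim() !== '' ? Number(value) : value;
  if (!Number.isInteger(n)) {
    throw new TypeError(`Cannot interpret ${JSON.stringify(value)} as an integer`);
  }
  return n;
}

function normalizeUser(raw) {
  return { userId: toInteger(raw.userId), isActive: toBoolean(raw.isActive) };
}

const user = normalizeUser(JSON.parse('{"userId": "12345", "isActive": "0"}'));
console.log(user); // { userId: 12345, isActive: false }
```

Throwing on unknown values is a deliberate choice here: a loud failure during integration is cheaper than a silently wrong permission check in production.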

How to Prevent This

1. Use JSON Schema validation:

{

"$schema": "http://json-schema.org/draft-07/schema#",

"type": "object",

"properties": {

"id": {"type": "number"},

"age": {"type": "number"},

"active": {"type": "boolean"},

"name": {"type": "string"}

},

"required": ["id", "name"]

}

2. Validate after parsing:

function validateUser(user) {

if (typeof user.id !== 'number') {

throw new Error('User ID must be a number');

}

if (typeof user.active !== 'boolean') {

throw new Error('Active status must be boolean');

}

return user;

}

const user = validateUser(JSON.parse(jsonString));

3. Use TypeScript:

interface User {

id: number;

age: number;

active: boolean;

name: string;

}

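const user: User = JSON.parse(jsonString);

// TypeScript checks how you use user at compile time, but JSON.parse returns any,
// so the data itself is not checked. Pair the interface with runtime validation.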

4. Test with real data - Don’t just test with your own well-formed JSON. Test with actual API responses.

Error #11: Mixing Up Array and Object Syntax

Error messages you’ll see:

- SyntaxError: Unexpected token , in JSON

- Expected property name or '}'

Arrays use square brackets []. Objects use curly braces {}. Mixing them breaks parsing.

The Problem

{

"users": {

{"id": 1, "name": "Alice"},

{"id": 2, "name": "Bob"}

}

}

This tries to use object syntax {} for what should be an array.

The Fix

{

"users": [

{"id": 1, "name": "Alice"},

{"id": 2, "name": "Bob"}

]

}

Use array syntax [] for lists of items.

Array Syntax Rules

Arrays:

- Use square brackets: [...]

- Contain comma-separated values

- Values can be any type (mixed types allowed)

- Order matters

{

"mixed": [1, "two", true, null, {"nested": "object"}, [1, 2, 3]]

}

Objects:

- Use curly braces: {...}

- Contain comma-separated key-value pairs

- Keys must be strings

- Order doesn’t matter (technically)

{

"user": {

"name": "Alice",

"age": 30,

"address": {

"city": "San Francisco"

}

}

}

Common Confusion: Single Item Arrays

Wrong assumption:

“I only have one item, so I don’t need an array.”

Wrong:

{

"users": {"id": 1, "name": "Alice"}

}

Right:

{

"users": [

{"id": 1, "name": "Alice"}

]

}

Keep array syntax even for single items. Your code expects an array:

const users = data.users;

users.forEach(user => { // This breaks if users is an object

console.log(user.name);

});
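If you consume an API that you do not control and that sometimes sends a single object where a list is expected, a small normalizing helper keeps the rest of the code simple. This is a sketch with a hypothetical helper name, asArray; treating a missing value as an empty list is an assumption that may not suit every API.

```javascript
// Always return an array: wrap single values, pass arrays through,
// and treat null or undefined as "no items".
function asArray(value) {
  if (Array.isArray(value)) return value;
  if (value === null || value === undefined) return [];
  return [value];
}

const data = JSON.parse('{"users": {"id": 1, "name": "Alice"}}');
asArray(data.users).forEach(user => {
  console.log(user.name); // Alice
});
```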

Empty Arrays vs Empty Objects

Both are valid but mean different things:

{

"emptyArray": [],

"emptyObject": {}

}

Empty array: No items in the list.

if (data.emptyArray.length === 0) { // true

console.log("No items");

}

Empty object: No properties defined.

if (Object.keys(data.emptyObject).length === 0) { // true

console.log("No properties");

}

How to Prevent This

1. Plan your structure first:

Before writing JSON, decide:

- Is this a list of items? → Use array

- Is this a single object with properties? → Use object

- Do I need to add more items later? → Probably an array

2. Be consistent:

{

"users": [

{"id": 1, "name": "Alice"}

],

"settings": {

"theme": "dark",

"notifications": true

}

}

Lists of similar items = arrays. Single entities = objects.

3. Use our Formatter tool to validate structure

Error #12: Incorrect Nesting and Brackets

Error messages you’ll see:

- SyntaxError: Unexpected end of JSON input

- Expected '}' or ','

- Unexpected token

Mismatched or missing brackets cause parsing failures.

The Problem

{

"config": {

"database": {

"host": "localhost",

"port": 5432

},

"cache": {

"enabled": true,

"ttl": 3600

}

}

The closing brace for the config object is missing.

The Fix

{

"config": {

"database": {

"host": "localhost",

"port": 5432

},

"cache": {

"enabled": true,

"ttl": 3600

}

}

}

Every opening brace/bracket needs a closing one.

Bracket Matching Rules

Objects: { must close with }

{

"key": "value"

}

Arrays: [ must close with ]

[

"item1",

"item2"

]

Nested structures:

{

"users": [

{

"name": "Alice",

"roles": ["admin", "editor"]

}

]

}

Count them:

- Opening {: 2

- Closing }: 2 ✓

- Opening [: 2

- Closing ]: 2 ✓

Common Nesting Mistakes

Missing closing bracket:

{

"items": [

{"name": "Item 1"},

{"name": "Item 2"}

// Missing ]

}

Extra closing bracket:

{

"items": []

}} // Extra }

Wrong bracket type:

{

"items": [

{"name": "Item 1"}

} // Should be ]

}

Deep Nesting Issues

Problem: JSON with 10+ nesting levels is hard to debug:

{

"level1": {

"level2": {

"level3": {

"level4": {

"level5": {

"level6": {

"level7": {

"level8": {

"level9": {

"level10": "value"

}

}

}

}

}

}

}

}

}

}

Missing one } and you’ll spend 10 minutes finding it.

Solution: Flatten your structure:

{

"path": "level1.level2.level3",

"value": "data"

}

Or use IDs to reference relationships instead of deep nesting.

How to Prevent This

1. Use syntax highlighting:

Most editors color-match brackets. Click on a bracket to see its pair.

2. Format as you write:

Use our Formatter tool frequently. It will immediately show mismatched brackets.

3. Count brackets:

Before saving:

- Count opening braces: {

- Count closing braces: }

- They must be equal

4. Use editor features:

- VS Code: Ctrl+Shift+P → “Format Document”

- Auto-close brackets: Most editors auto-insert closing brackets

5. Build incrementally:

Don’t write 100 lines of JSON at once. Write a section, validate it, then add more.
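The manual bracket count from step 3 can also be automated. Plain counting is fooled by braces inside string values and cannot tell you which bracket is wrong, so the sketch below uses a stack and skips string contents. The function name checkBrackets and the message wording are illustrative only.

```javascript
// Check that every { and [ is closed by the matching } or ], ignoring strings.
function checkBrackets(text) {
  const openerFor = { '}': '{', ']': '[' };
  const stack = [];
  let inString = false;
  for (let i = 0; i < text.length; i++) {
    const ch = text[i];
    if (inString) {
      if (ch === '\\') i++; // skip the escaped character
      else if (ch === '"') inString = false;
      continue;
    }
    if (ch === '"') inString = true;
    else if (ch === '{' || ch === '[') stack.push({ ch, index: i });
    else if (ch === '}' || ch === ']') {
      const open = stack.pop();
      if (!open) {
        return { ok: false, message: `Unexpected ${ch} at position ${i}` };
      }
      if (open.ch !== openerFor[ch]) {
        return { ok: false, message: `${open.ch} opened at position ${open.index} is closed by ${ch} at position ${i}` };
      }
    }
  }
  if (stack.length > 0) {
    const open = stack[stack.length - 1];
    return { ok: false, message: `${open.ch} opened at position ${open.index} is never closed` };
  }
  return { ok: true, message: 'Brackets are balanced' };
}

console.log(checkBrackets('{"items": [{"name": "Item 1"}}').ok); // false
```

A balanced result does not mean the JSON is valid; it only rules out this one class of mistake before you run a full validator.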

Error #13: Using undefined Instead of null

Error messages you’ll see:

- SyntaxError: Unexpected token u in JSON

- Unexpected identifier

JavaScript has undefined. JSON doesn’t.

The Problem

{

"name": "Alice",

"middleName": undefined,

"age": 30

}

undefined is a JavaScript concept, not a JSON data type.

The Fix (Option 1): Use null

{

"name": "Alice",

"middleName": null,

"age": 30

}

null represents the intentional absence of a value.

The Fix (Option 2): Omit the property

{

"name": "Alice",

"age": 30

}

If a value doesn’t exist, don’t include the property at all.

undefined vs null in JavaScript

JavaScript:

const user = {

name: "Alice",

middleName: undefined, // Not defined

lastName: null // Defined but no value

};

console.log(user.middleName); // undefined

console.log(user.lastName); // null

console.log(user.age); // undefined (doesn't exist)

JSON equivalents:

{

"name": "Alice",

"lastName": null

}

middleName and age are omitted entirely (equivalent to undefined).

When to Use null vs Omit

Use null when:

- The property is expected but has no value

- You want to explicitly indicate “no value”

- Your API schema requires the field

{

"user": {

"name": "Alice",

"profilePicture": null,

"bio": null

}

}

This says: “These fields exist but haven’t been set yet.”

Omit when:

- The property is optional

- You want to reduce JSON size

- The absence of the property is meaningful

{

"user": {

"name": "Alice"

}

}

This says: “These are the only fields that exist.”
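On the consuming side, the two conventions can be told apart after parsing. This is a small sketch; fieldState is a hypothetical helper name, not part of any library.

```javascript
// Hypothetical helper: distinguishes an omitted property from an explicit null.
function fieldState(obj, key) {
  if (!Object.prototype.hasOwnProperty.call(obj, key)) return "omitted";
  return obj[key] === null ? "null" : "set";
}

const withNull = JSON.parse('{"name": "Alice", "bio": null}');
const withoutField = JSON.parse('{"name": "Alice"}');

console.log(fieldState(withNull, "bio"));     // prints: null
console.log(fieldState(withoutField, "bio")); // prints: omitted
console.log(fieldState(withNull, "name"));    // prints: set
```

Note that a plain `obj.bio == null` check treats both cases the same, which is fine when the distinction does not matter to your API.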

JSON.stringify() Behavior

JSON.stringify() automatically handles undefined:

const data = {

name: "Alice",

age: undefined,

active: true

};

console.log(JSON.stringify(data));

// Output: {"name":"Alice","active":true}

// Note: age is omitted entirely

In arrays, undefined becomes null:

const data = {

values: [1, undefined, 3]

};

console.log(JSON.stringify(data));

// Output: {"values":[1,null,3]}
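If a property should survive serialization as null instead of disappearing, a replacer function can make that explicit. This is a sketch; the name undefinedToNull is an assumption for illustration.

```javascript
// Replacer: JSON.stringify calls this for every property, including those
// whose value is undefined, so returning null keeps the key in the output.
const undefinedToNull = (key, value) => (value === undefined ? null : value);

const person = { name: "Alice", age: undefined, active: true };
const output = JSON.stringify(person, undefinedToNull);

console.log(output);
// {"name":"Alice","age":null,"active":true}
```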

How to Prevent This

1. Use null explicitly:

const data = {

name: "Alice",

middleName: null // Explicit null, not undefined

};

2. Remove undefined properties:

// Round-trips through JSON, so it also drops functions and turns Dates into strings

function removeUndefined(obj) {

return JSON.parse(JSON.stringify(obj));

}

const clean = removeUndefined({

name: "Alice",

age: undefined

});

// Result: {name: "Alice"}

3. Use default values:

const data = {

name: "Alice",

middleName: user.middleName ?? null // ?? only replaces undefined/null; || would also replace ""

};

Error #14: File Encoding Issues (UTF-16, UTF-8 with BOM)

Error messages you’ll see:

SyntaxError: Unexpected token ï in JSON

Invalid character

Unexpected token ILLEGAL

Already partially covered in Error #9, but encoding deserves its own section.

The Problem

UTF-8 with BOM:

EF BB BF 7B 22 6E 61 6D 65 22 3A ...

(BOM) { " n a m e " : ...

UTF-16:

FF FE 7B 00 22 00 6E 00 61 00 6D 00 65 00 22 00 ...

(BOM) { " n a m e " ...

Both cause JSON.parse() to fail.

The Fix

1. Save as UTF-8 without BOM:

In VS Code:

- Click encoding in status bar (bottom right)

- Select “Save with Encoding”

- Choose “UTF-8” (not “UTF-8 with BOM”)

In Notepad++:

- Encoding → Convert to UTF-8 (without BOM)

2. Strip BOM programmatically:

function parseJSONWithBOM(text) {

// Remove BOM if present

if (text.charCodeAt(0) === 0xFEFF) {

text = text.slice(1);

}

return JSON.parse(text);

}

3. Convert encoding:

# Check current encoding

file -i data.json

# Convert UTF-16 to UTF-8

iconv -f UTF-16 -t UTF-8 input.json > output.json

# Remove BOM

sed -i '1s/^\xEF\xBB\xBF//' file.json

How to Detect Encoding Issues

Visual symptoms:

- Strange characters at file start: ï»¿, ÿþ

- Garbled text: Thi s i s a s tri ng (spaces between characters)

Command-line detection:

# View first few bytes as hex

hexdump -C file.json | head -n 1

# UTF-8 with BOM starts with: ef bb bf

# UTF-16 LE starts with: ff fe

# UTF-16 BE starts with: fe ff

In code:

const fs = require('fs');

const buffer = fs.readFileSync('data.json');

// Check for BOM

if (buffer[0] === 0xEF && buffer[1] === 0xBB && buffer[2] === 0xBF) {

console.log('UTF-8 with BOM detected');

}
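The same byte check can drive decoding. The sketch below assumes Node.js and a hypothetical helper name, parseJsonBuffer; it handles the three BOMs listed above and falls back to UTF-8 when no BOM is present.

```javascript
// Hypothetical helper: picks a decoder based on the BOM, strips the BOM,
// then parses the resulting text.
function parseJsonBuffer(buffer) {
  let text;
  if (buffer[0] === 0xef && buffer[1] === 0xbb && buffer[2] === 0xbf) {
    text = buffer.subarray(3).toString("utf8"); // UTF-8 with BOM
  } else if (buffer[0] === 0xff && buffer[1] === 0xfe) {
    text = buffer.subarray(2).toString("utf16le"); // UTF-16 little-endian
  } else if (buffer[0] === 0xfe && buffer[1] === 0xff) {
    // UTF-16 big-endian: copy, swap each byte pair, decode as little-endian.
    // swap16() throws if the remaining length is odd (a truncated file).
    text = Buffer.from(buffer.subarray(2)).swap16().toString("utf16le");
  } else {
    text = buffer.toString("utf8"); // no BOM: assume UTF-8
  }
  return JSON.parse(text);
}

const utf8WithBom = Buffer.concat([
  Buffer.from([0xef, 0xbb, 0xbf]),
  Buffer.from('{"name":"Alice"}', "utf8"),
]);
console.log(parseJsonBuffer(utf8WithBom).name); // Alice
```

In practice you would pass it the result of fs.readFileSync('data.json') called without an encoding argument, so that the raw bytes are still available.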

Windows vs Unix Line Endings

While not an encoding issue per se, line ending differences cause problems:

Windows (CRLF): \r\n (bytes: 0D 0A)

Unix (LF): \n (byte: 0A)

JSON treats both as insignificant whitespace, but mixing them in a Git repository produces noisy diffs and avoidable merge conflicts.

Fix with .gitattributes:

*.json text eol=lf

Convert existing files:

# Windows to Unix

dos2unix file.json

# Unix to Windows

unix2dos file.json

How to Prevent This

1. Configure editor:

- VS Code: "files.encoding": "utf8"

- Sublime: "default_encoding": "UTF-8"

2. Use .editorconfig:

[*.json]

charset = utf-8

end_of_line = lf

3. Git hooks:

Pre-commit hook to reject non-UTF-8 files:

#!/bin/bash

for file in $(git diff --cached --name-only --diff-filter=ACM | grep '\.json$'); do

# Pure-ASCII files report us-ascii, which is a valid subset of UTF-8

if ! file -i "$file" | grep -qE "charset=(utf-8|us-ascii)"; then

echo "Error: $file is not UTF-8 encoded"

exit 1

fi

done

Error #15: Maximum Nesting Depth Exceeded

Error messages you’ll see:

RangeError: Maximum call stack size exceeded

JSON recursion limit reached

Browser becomes unresponsive

This occurs with deeply nested or circular structures.

The Problem

Deeply nested JSON:

{

"a": {

"b": {

"c": {

"d": {

"e": {

"f": {

"g": {

"h": {

"i": {

"j": "too deep!"

}

}

}

}

}

}

}

}

}

}

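Many JSON parsers enforce a maximum nesting depth. For example, PHP's json_decode() defaults to 512 levels and .NET's System.Text.Json to 64. JavaScript engines define no fixed limit, but extremely deep structures can still exhaust the call stack when they are serialized or traversed recursively.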

Circular references:

const obj = {name: "Alice"};

obj.self = obj; // Circular reference

JSON.stringify(obj);

// TypeError: Converting circular structure to JSON

The Fix for Deep Nesting

Flatten the structure:

Instead of:

{

"user": {

"profile": {

"contact": {

"address": {

"street": {

"name": "Main St"

}

}

}

}

}

}

Use:

{

"user_profile_contact_address_street_name": "Main St"

}

Or better:

{

"user": {

"street": "Main St",

"city": "San Francisco"

}

}

The Fix for Circular References

Option 1: Remove the circular reference:

const obj = {name: "Alice", friend: {name: "Bob"}};

obj.friend.friend = {name: "Alice"}; // Not circular - different object

JSON.stringify(obj); // Works

Option 2: Use a replacer function:

function stringifyWithCircular(obj) {

const seen = new WeakSet();

return JSON.stringify(obj, (key, value) => {

if (typeof value === "object" && value !== null) {

if (seen.has(value)) {

return "[Circular]";

}

seen.add(value);

}

return value;

});

}

const obj = {name: "Alice"};

obj.self = obj;

console.log(stringifyWithCircular(obj));

// Output: {"name":"Alice","self":"[Circular]"}

Option 3: Restructure your data:

Instead of circular references, use IDs:

// BAD: Circular reference

const user = {id: 1, name: "Alice"};

const friend = {id: 2, name: "Bob", friend: user};

user.friend = friend;

// GOOD: Use IDs

const users = [

{id: 1, name: "Alice", friendId: 2},

{id: 2, name: "Bob", friendId: 1}

];

Real-World Causes

1. Recursive data structures:

// Directory tree with parent references

const root = {name: "root", children: []};

const child = {name: "child", parent: root};

root.children.push(child);

// Can't serialize to JSON
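A tree like this can still be serialized if the back-references are left out and rebuilt after parsing. The following is a sketch under the assumption that every back-reference lives under a key named parent; restoreParents is a hypothetical helper.

```javascript
const tree = { name: "root", children: [] };
tree.children.push({ name: "child", parent: tree, children: [] });

// Serialize: returning undefined for "parent" omits the key, so the
// serializer never follows the cycle.
const treeJson = JSON.stringify(tree, (key, value) =>
  key === "parent" ? undefined : value
);

// Deserialize: walk the tree and re-attach the parent links.
function restoreParents(node, parent = null) {
  if (parent) node.parent = parent;
  for (const child of node.children || []) {
    restoreParents(child, node);
  }
  return node;
}

const restored = restoreParents(JSON.parse(treeJson));
console.log(treeJson);
// {"name":"root","children":[{"name":"child","children":[]}]}
console.log(restored.children[0].parent === restored); // true
```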

2. ORM objects:

Sequelize, TypeORM, and other ORMs create circular references:

const user = await User.findOne({include: [Post]});

// user.posts[0].user === user (circular!)

JSON.stringify(user); // Error!

Fix: Use .toJSON() method or manually select fields:

const user = await User.findOne({

attributes: ['id', 'name'],

include: [{

model: Post,

attributes: ['id', 'title']

}]

});

3. Event emitters:

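const EventEmitter = require('events');

const emitter = new EventEmitter();

const server = {name: "server", emitter};

emitter.server = server; // Back-reference, common in framework objects

JSON.stringify(server); // TypeError: Converting circular structure to JSON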

How to Prevent This

1. Limit nesting in design:

Aim for 3-4 levels maximum:

{

"user": {

"profile": {

"name": "Alice"

}

}

}
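A nesting budget like this can be checked in code. The sketch below uses a hypothetical maxDepth helper that walks a parsed value iteratively, so the check itself cannot overflow the call stack; it assumes the input came from JSON.parse and therefore has no cycles.

```javascript
// Hypothetical helper: returns how many container levels (objects or arrays)
// are nested inside each other. Primitives have depth 0.
function maxDepth(value) {
  let deepest = 0;
  const stack = [{ value, depth: 0 }];

  while (stack.length > 0) {
    const { value: current, depth } = stack.pop();
    if (current !== null && typeof current === "object") {
      const childDepth = depth + 1;
      if (childDepth > deepest) deepest = childDepth;
      for (const child of Object.values(current)) {
        stack.push({ value: child, depth: childDepth });
      }
    }
  }
  return deepest;
}

const profile = JSON.parse('{"user": {"profile": {"name": "Alice"}}}');
console.log(maxDepth(profile)); // 3
```

A test or lint step could then fail when maxDepth exceeds whatever limit your team agrees on.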

2. Test circular reference detection:

function hasCircular(obj) {

try {

JSON.stringify(obj);

return false;

} catch (e) {

return /circular|cyclic/i.test(e.message); // Wording differs between engines

}

}

3. Use normalization:

Instead of nesting, use flat structure with references:

{

"users": {

"1": {"name": "Alice", "posts": [101, 102]},

"2": {"name": "Bob", "posts": [103]}

},

"posts": {

"101": {"title": "Post 1", "author": 1},

"102": {"title": "Post 2", "author": 1},

"103": {"title": "Post 3", "author": 2}

}

}

This is how Redux and similar state libraries structure data.
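Reading related records back out of a normalized structure is a lookup by ID. The sketch below reuses the shape shown above; getPostsByUser is a hypothetical helper name.

```javascript
const store = {
  users: {
    "1": { name: "Alice", posts: [101, 102] },
    "2": { name: "Bob", posts: [103] },
  },
  posts: {
    "101": { title: "Post 1", author: 1 },
    "102": { title: "Post 2", author: 1 },
    "103": { title: "Post 3", author: 2 },
  },
};

// Hypothetical helper: resolves a user's post IDs into post objects.
// Numeric IDs work as keys because property names are converted to strings.
function getPostsByUser(state, userId) {
  const user = state.users[userId];
  if (!user) return [];
  return user.posts.map((postId) => state.posts[postId]);
}

console.log(getPostsByUser(store, 1).map((post) => post.title));
// [ 'Post 1', 'Post 2' ]
console.log(JSON.stringify(store).length > 0); // serializes without cycles
```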

Debugging Tools and Techniques

Now that you know the errors, let’s talk about the best ways to find and fix them.

Browser Developer Tools

Chrome/Edge DevTools:

- Open DevTools (F12)

- Go to Console tab

- Error shows the character position:

Uncaught SyntaxError: Unexpected token } in JSON at position 247

- Click the error to see the JSON source

- Count to position 247 to find the error

Firefox Developer Tools:

Better error messages with line numbers:

SyntaxError: JSON.parse: unexpected character at line 5 column 12

Directly points to the problem location.
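When an engine reports only a character position, it can be converted to a line and column instead of counting by hand. This is a minimal sketch; locate is a hypothetical helper, and the position is passed in directly because the wording of error messages varies between engines and versions.

```javascript
// Hypothetical helper: converts a 0-based character position into a
// 1-based line and column, plus the text of the offending line.
function locate(text, position) {
  const linesBefore = text.slice(0, position).split("\n");
  const line = linesBefore.length;
  const column = linesBefore[linesBefore.length - 1].length + 1;
  const context = text.split("\n")[line - 1].replace(/\r$/, "");
  return { line, column, context };
}

const broken = '{\n  "a": 1,\n}';
console.log(locate(broken, 12));
// { line: 3, column: 1, context: '}' }
```

Here the parser stops at the closing brace on line 3, while the real mistake is the trailing comma at the end of line 2, so it is worth reading the line before the reported one as well.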

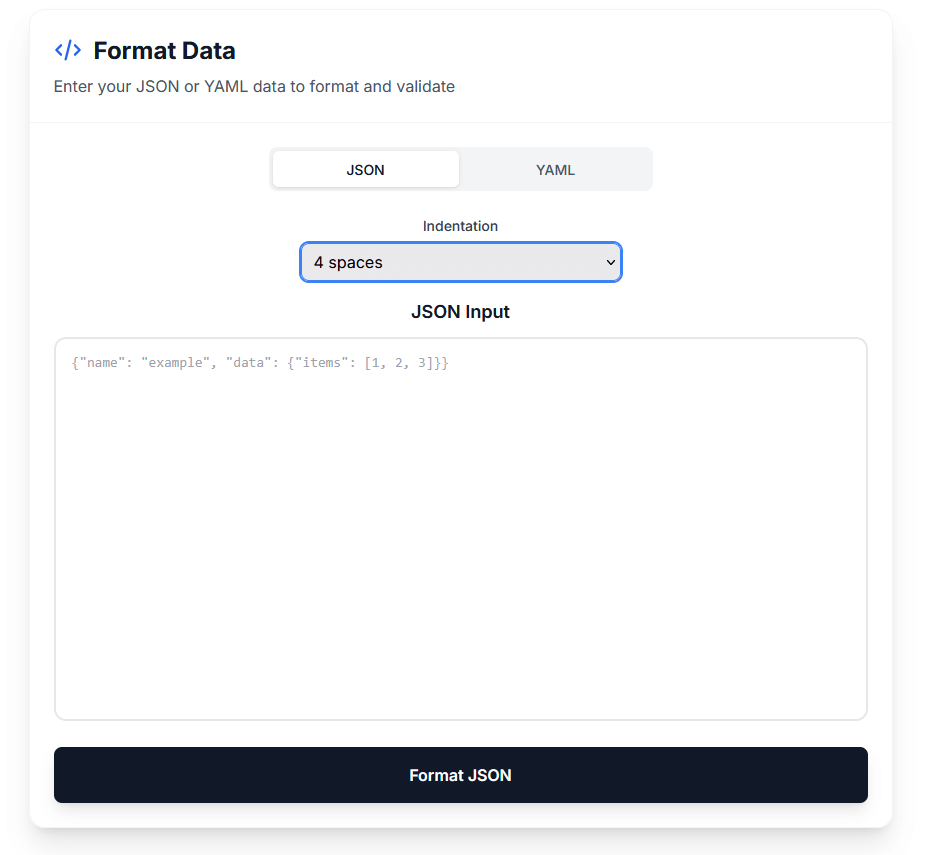

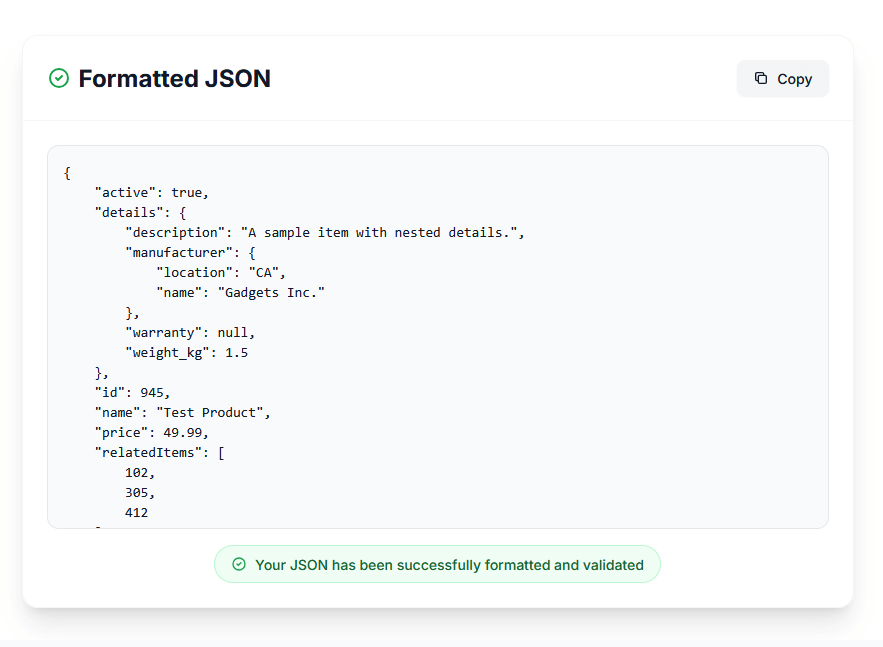

Online JSON Validators

Our JSON Formatter provides:

- Syntax highlighting

- Real-time error detection

- Line number references

- Suggested fixes

How to use:

- Paste your JSON

- Errors highlight in red

- Hover over errors for explanations

- Click “Format” to auto-fix some issues

Other useful tools on our site:

- Diff Tool - Compare two JSON files to find differences

- Encoder - Properly escape special characters

- Hash Generator - Verify JSON integrity with checksums

Command-Line Validation

Python:

# Validate JSON file

python -m json.tool data.json

# If valid, outputs formatted JSON

# If invalid, shows error with line number

Node.js:

# Validate and format

node -e "console.log(JSON.stringify(JSON.parse(require('fs').readFileSync('data.json')), null, 2))"

jq (JSON processor):

# Validate

jq empty data.json

# If valid, no output

# If invalid, shows error

Editor Extensions

VS Code:

- Built-in JSON validation

- Install “JSON Tools” extension for advanced features

- Enable “Format on Save” for automatic formatting

Sublime Text:

- Install “Pretty JSON” package

- Ctrl+Alt+J to format/validate

IntelliJ/WebStorm:

- Built-in JSON validation

- Right-click → “Reformat Code”

Automated Testing

Jest (JavaScript):

const fs = require('fs');

describe('JSON validation', () => {

it('should parse config file', () => {

const json = fs.readFileSync('config.json', 'utf8');

expect(() => JSON.parse(json)).not.toThrow();

});

it('should have required fields', () => {

const config = JSON.parse(fs.readFileSync('config.json'));

expect(config).toHaveProperty('database.host');

expect(config).toHaveProperty('database.port');

});

});

Schema validation:

const Ajv = require('ajv');

const ajv = new Ajv();

const schema = {

type: "object",

properties: {

name: {type: "string"},

age: {type: "number"}

},

required: ["name"]

};

const validate = ajv.compile(schema);

const data = {name: "Alice", age: 30}; // Example input to validate

const valid = validate(data);

if (!valid) {

console.log(validate.errors);

}

Pre-commit Hooks

Prevent bad JSON from entering your repository:

package.json:

{

"husky": {

"hooks": {

"pre-commit": "npm run validate-json"

}

},

"scripts": {

"validate-json": "node scripts/validate-json.js"

}

}

scripts/validate-json.js:

const fs = require('fs');

const path = require('path');

const glob = require('glob');

const files = glob.sync('**/*.json', {

ignore: ['node_modules/**', 'dist/**']

});

let hasErrors = false;

files.forEach(file => {

try {

const content = fs.readFileSync(file, 'utf8');

JSON.parse(content);

console.log(`✓ ${file}`);

} catch (error) {

console.error(`✗ ${file}: ${error.message}`);

hasErrors = true;

}

});

if (hasErrors) {

process.exit(1);

}

Best Practices for Writing Error-Free JSON

Prevention is better than debugging. Follow these practices:

1. Always Use JSON.stringify() in Code

Don’t concatenate strings:

// BAD

const json = '{"name": "' + userName + '"}';

// GOOD

const json = JSON.stringify({name: userName});
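To see why concatenation fails, consider a value that contains a double quote. The sample name below is made up for illustration.

```javascript
const userName = 'Al "Ace" Smith';

// Concatenation copies the inner quotes verbatim and produces invalid JSON.
const concatenated = '{"name": "' + userName + '"}';
let concatenatedIsValid = true;
try {
  JSON.parse(concatenated);
} catch {
  concatenatedIsValid = false;
}

// JSON.stringify escapes the quotes, so the value round-trips unchanged.
const serialized = JSON.stringify({ name: userName });

console.log(concatenatedIsValid); // false
console.log(serialized); // {"name":"Al \"Ace\" Smith"}
console.log(JSON.parse(serialized).name === userName); // true
```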

2. Format Immediately

After writing JSON, format it immediately:

- Paste into our Formatter tool

- Click “Format”

- Copy the corrected version

This catches most syntax errors instantly.

3. Use Syntax Highlighting

Never edit JSON in plain text editors. Use:

- VS Code

- Sublime Text

- Any editor with JSON syntax highlighting

Colors help you spot errors visually.

4. Validate Before Deployment

Add JSON validation to your CI/CD pipeline:

# .github/workflows/validate.yml

name: Validate JSON

on: [push, pull_request]

jobs:

  validate:

    runs-on: ubuntu-latest

    steps:

      - uses: actions/checkout@v2

      - name: Validate JSON files

        run: |

          for file in $(find . -name "*.json"); do

            python -m json.tool "$file" > /dev/null || exit 1

          done

5. Use Linters and Formatters

ESLint for JSON:

{

"extends": ["plugin:json/recommended"],

"plugins": ["json"]

}

Prettier for auto-formatting:

{

"printWidth": 100,

"tabWidth": 2,

"trailingComma": "none"

}

6. Document Your JSON Structure

Keep a schema or example file:

users-schema.json:

{

"$schema": "http://json-schema.org/draft-07/schema#",

"type": "object",

"properties": {

"id": {"type": "number"},

"name": {"type": "string"},

"email": {"type": "string", "format": "email"},

"active": {"type": "boolean"}

},

"required": ["id", "name", "email"]

}

7. Review Changes Carefully

In pull requests, pay special attention to JSON changes:

- Check for trailing commas

- Verify quote types

- Look for duplicate keys

- Validate against schema

Use our Diff Tool to compare before/after versions.

8. Start Small and Build Up

Don’t write 500 lines of JSON at once:

- Write a small section

- Validate it

- Add the next section

- Validate again

This isolates errors to small chunks.

9. Keep Backups

Before making major JSON edits:

cp config.json config.json.backup

If something breaks, you can quickly revert.

10. Test with Real Data

Don’t just test with perfect example data. Test with:

- Empty values

- Special characters

- Unicode characters

- Very long strings

- Deeply nested structures

- Edge cases
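A quick way to exercise these cases is a round-trip check: serialize each sample, parse it back, and confirm nothing changed. The sample values and the survivesRoundTrip helper below are illustrative assumptions, not a complete test suite.

```javascript
const samples = [
  { label: "empty values", value: { s: "", a: [], o: {}, n: null } },
  { label: "special characters", value: 'quote " backslash \\ newline \n tab \t' },
  { label: "unicode", value: "café 日本語 😀" },
  { label: "very long string", value: "x".repeat(100000) },
  { label: "nested structure", value: { a: { b: { c: [1, [2, [3]]] } } } },
];

// Hypothetical helper: true when parsing the serialized text and serializing
// it again yields exactly the same text.
function survivesRoundTrip(value) {
  const text = JSON.stringify(value);
  return JSON.stringify(JSON.parse(text)) === text;
}

for (const { label, value } of samples) {
  console.log(`${label}: ${survivesRoundTrip(value)}`);
}
```

Replace the samples with data captured from your own system to get the most value out of a check like this.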

Quick Reference: Error Checklist

When you get a JSON error, run through this checklist:

Commas:

- Comma after every property except the last?

- No trailing comma after final property?

- Commas between array items?

Quotes:

- All keys in double quotes?

- All string values in double quotes?

- No single quotes anywhere?

- Special characters escaped (\\, \", \n)?

Brackets:

- Every { has a matching }?

- Every [ has a matching ]?

- Brackets correctly match (not {...])?

Data Types:

- Numbers unquoted?

- Booleans are true or false (lowercase)?

- Null is null (lowercase)?

- No undefined values?

Structure:

- Objects use {} syntax?

- Arrays use [] syntax?

- No duplicate keys?

Encoding:

- File saved as UTF-8?

- No BOM at file start?

- Control characters escaped?

Advanced:

- Nesting depth reasonable (<10 levels)?

- No circular references?

- Valid JSON file extension (.json)?

Conclusion: Becoming a JSON Debugging Expert

JSON errors are frustrating, but they follow predictable patterns. After working through this guide, you now know:

- The 15 most common JSON errors and their fixes

- How to read and understand error messages

- Which tools to use for validation and debugging

- Best practices to prevent errors before they happen

Key takeaways:

- Most errors are simple - Missing commas, wrong quotes, mismatched brackets

- Tools are your friend - Use our JSON Formatter to catch errors instantly

- Prevention beats debugging - Use JSON.stringify(), validators, and linters

- Error messages lie - The reported position is often after the actual error

- Practice makes perfect - The more JSON you work with, the faster you’ll spot errors

Next time you see a JSON error:

- Don’t panic

- Check the error position (and the line before it)

- Run through the checklist above

- Use our Formatter tool to identify the issue

- Fix it and validate

Remember: Even experienced developers make these mistakes. The difference is they have tools and techniques to fix them quickly. Now you do too.

Essential Developer Tools

Make JSON work easier with our suite of tools:

JSON & Data Tools:

- JSON Formatter - Format, validate, and beautify JSON instantly

- Diff Tool - Compare JSON files and spot differences

- Converter - Convert between JSON, XML, and other formats

- Hash Generator - Create checksums for data integrity

Encoding & Security:

- Encoder/Decoder - Encode JSON for URLs, Base64, and more

- JWT Decoder - Decode and inspect JSON Web Tokens

- Encryption Tool - Encrypt sensitive JSON data

- Checksum Calculator - Verify file integrity

Development Utilities:

- UUID Generator - Generate unique identifiers

- Random String Generator - Create secure random data

- Timestamp Converter - Work with Unix timestamps

- String Case Converter - Convert between naming conventions

Text Processing:

- Word Counter - Analyze JSON text content

- Duplicate Remover - Clean up duplicate entries

- Whitespace Remover - Minify JSON by removing whitespace

- Markdown Previewer - Preview JSON documentation

Web Development:

- User Agent Parser - Parse browser user agents

- HTTP Status Checker - Understand HTTP errors

- HTTP Headers Checker - Inspect HTTP request/response headers

- SSL Certificate Checker - Verify SSL certificates

View all tools: Developer Tools


Last Updated: October 29, 2025

Reading Time: 39 minutes

Author: Orbit2x Team