How to Debug Invalid JSON: 15 Common Errors & Fixes

You’ve copied JSON from an API. You pasted it into your code. You hit run. And then you see it:

SyntaxError: Unexpected token in JSON at position 47

Or worse: JSON.parse: unexpected character at line 1 column 23

Your JSON looks fine. You’ve stared at it for 10 minutes. The curly braces match. The quotes are there. But it still won’t parse.

Welcome to JSON debugging hell.

The problem with JSON errors is that they're simultaneously common and cryptic. Parsers tell you where they gave up, not what caused the problem: a missing closing brace on line 5 might not be reported until the end of the file. And a trailing comma that JavaScript accepts without complaint is a hard error in JSON.

This guide is different. You’ll learn the 15 most common JSON errors, how to identify them instantly, and how to fix them permanently. No more guessing. No more trial-and-error. Just clear diagnosis and immediate solutions.

By the end, you’ll understand why single quotes break JSON, how invisible characters sabotage parsing, and which “valid JavaScript” patterns are forbidden in JSON. You’ll never waste 30 minutes hunting for a missing bracket again.

Quick Answer: Why Does My JSON Keep Breaking?

Don’t have time to read 5,000 words? Here’s what you need to know:

- What JSON is: A strict data format that looks like JavaScript but isn’t JavaScript

- Why it breaks: JSON allows only double quotes, no trailing commas, no comments, and limited data types

- Most common cause: Single quotes instead of double quotes (accounts for 40% of errors)

- Second most common: Trailing commas at end of arrays or objects

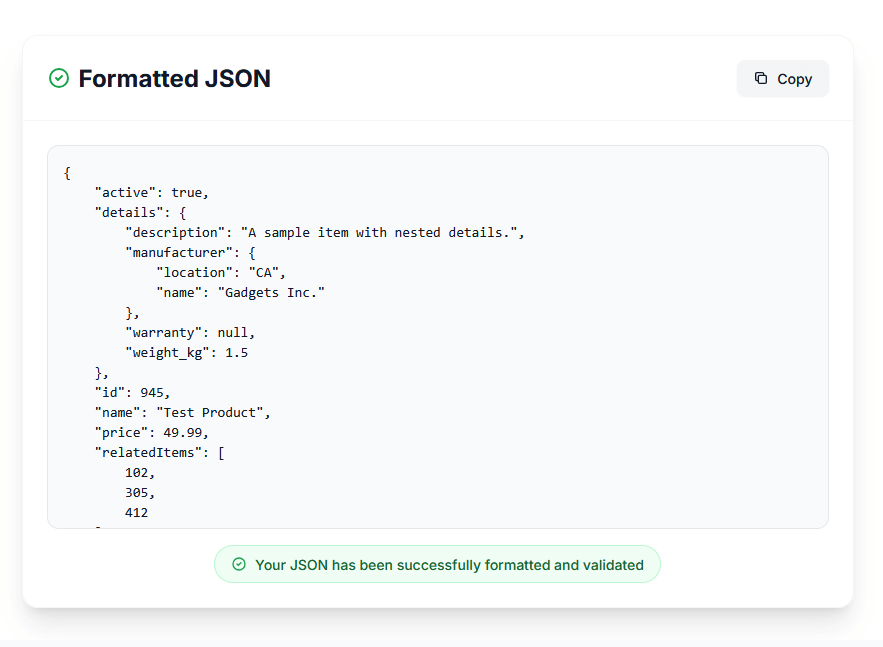

- Quick fix: Use our JSON Formatter tool to instantly validate and fix syntax errors

- Prevention: Always validate JSON before deploying to production

Still here? Great. Let’s debug every JSON error you’ll ever encounter.

What is JSON and Why is it So Strict?

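JSON (JavaScript Object Notation) is a text-based data format for transmitting structured information between systems. Despite the name, JSON is not JavaScript: its syntax was borrowed from JavaScript object and array literals, but it is a separate, language-independent format with much stricter rules.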

Why JSON is More Restrictive Than JavaScript

JavaScript is forgiving. JSON is not. Here’s why:

JavaScript object (valid):

const person = {

name: 'John', // Single quotes OK

age: 30, // Unquoted keys OK

hobbies: ['reading', 'gaming',], // Trailing comma OK

// This is a comment

};

JSON (INVALID - multiple errors):

{

name: 'John', // ❌ Unquoted key and single quotes forbidden

age: 30, // ❌ Unquoted key; trailing comma forbidden

// This is a comment // ❌ Comments forbidden

}

Correct JSON:

{

"name": "John",

"age": 30

}

The 7 Golden Rules of Valid JSON

- All strings MUST use double quotes ("text", never 'text')

- All object keys MUST be quoted strings ("key": value, never key: value)

- No trailing commas after the last property or array item

- No comments allowed (no // or /* */)

- Only 6 data types allowed: string, number, object, array, boolean, null

- Numbers must be finite decimal literals (no NaN, Infinity, 0x hex, or 0o octal)

- Proper character escaping for quotes, backslashes, newlines, and other special characters

Break any of these rules? Your JSON won’t parse.
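To see the rules in action, here is a small sketch: checkJson() is a hypothetical helper name, and each sample breaks exactly one rule from the list. Error wording differs between JavaScript engines, so the sketch only relies on whether JSON.parse() succeeds.

```javascript
// Hypothetical helper: report whether a string parses as JSON, and why not.
function checkJson(text) {
  try {
    JSON.parse(text);
    return { valid: true, error: null };
  } catch (err) {
    // The message wording varies between engines (V8, SpiderMonkey, JavaScriptCore).
    return { valid: false, error: err.message };
  }
}

// One sample per rule; each one is rejected by JSON.parse().
const ruleBreakers = {
  singleQuotes: "{'name': 'John'}",
  unquotedKey: '{name: "John"}',
  trailingComma: '{"name": "John",}',
  comment: '{"name": "John" /* note */}',
  undefinedValue: '{"name": undefined}',
  hexNumber: '{"count": 0x1F}',
  rawNewline: '{"text": "line 1\nline 2"}', // \n is a real line break inside the string
};

for (const [rule, text] of Object.entries(ruleBreakers)) {
  console.log(rule, checkJson(text).valid); // false for every sample
}

console.log(checkJson('{"name": "John", "tags": [], "age": null}').valid); // true
```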

Tools: Validate your JSON instantly with our JSON Formatter and compare formats with JSON vs XML vs YAML.

Error #1: Unexpected Token Errors

Error message:

SyntaxError: Unexpected token < in JSON at position 0

SyntaxError: Unexpected token u in JSON at position 0

Unexpected token o in JSON at position 1

What Causes It

This error means the JSON parser encountered a character it didn’t expect at a specific position. Common causes:

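- HTML returned instead of JSON (API error pages)

- The value undefined: either a bare undefined in the text, or a call like JSON.parse(undefined), which is parsed as the string "undefined" (hence "Unexpected token u")

- Single-word responses not wrapped in quotes (for example, a plain-text OK)

- Passing an already-parsed object to JSON.parse(), which converts it to the string "[object Object]" (hence "Unexpected token o at position 1")

- JavaScript code mixed with JSON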

Visual Example

Before (Broken):

<!DOCTYPE html>

<html>

<head><title>404 Not Found</title></head>

This happens when your API endpoint returns an HTML error page instead of JSON.

Before (Broken):

{

"status": undefined,

"data": null

}

After (Fixed):

{

"status": null,

"data": null

}

How to Fix It

Fix 1: Verify you’re receiving JSON, not HTML

fetch('/api/data')

.then(response => {

const contentType = response.headers.get('content-type');

if (!contentType || !contentType.includes('application/json')) {

throw new TypeError("Oops, we didn't get JSON!");

}

return response.json();

})

.catch(error => console.error('Error:', error));

Fix 2: Check the actual response

// Log the raw response before parsing

fetch('/api/data')

.then(response => response.text()) // Get as text first

.then(text => {

console.log('Raw response:', text); // See what you actually got

return JSON.parse(text); // Then try to parse

});
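Once you have the raw text, the "position" in the error message is only useful if you can find it. A minimal sketch with hypothetical helper names: locateJsonPosition() converts a 0-based character offset into a 1-based line and column and prints that line with a caret under the offending character. explainJsonError() assumes the engine's message contains "position N"; newer Node versions and other browsers phrase messages differently (some report line and column, some only an excerpt), so it falls back to a null location.

```javascript
// Turn a 0-based character offset into a 1-based line/column plus a caret excerpt.
function locateJsonPosition(text, position) {
  const before = text.slice(0, position);
  const line = before.split('\n').length;
  const lineStart = before.lastIndexOf('\n') + 1;
  const column = position - lineStart + 1;
  let lineEnd = text.indexOf('\n', position);
  if (lineEnd === -1) lineEnd = text.length;
  const excerpt = text.slice(lineStart, lineEnd);
  const caret = ' '.repeat(column - 1) + '^';
  return { line, column, excerpt, caret };
}

// Assumption: the message contains "position <n>"; otherwise location is null.
function explainJsonError(text) {
  try {
    JSON.parse(text);
    return null; // parsed fine
  } catch (err) {
    const match = /position (\d+)/.exec(err.message);
    return {
      message: err.message,
      location: match ? locateJsonPosition(text, Number(match[1])) : null,
    };
  }
}

const broken = '{\n  "name": "John",\n  "age": 30,\n}';
const report = explainJsonError(broken);
console.log(report.message);
if (report.location) {
  console.log(`line ${report.location.line}, column ${report.location.column}`);
  console.log(report.location.excerpt);
  console.log(report.location.caret);
}
```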

Fix 3: Replace undefined with null

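// ❌ WRONG - undefined has no JSON equivalent; JSON.stringify() silently drops the property

const badData = { status: undefined };

JSON.stringify(badData); // '{}'

// ✅ CORRECT - use null instead

const goodData = { status: null };

const json = JSON.stringify(goodData); // '{"status":null}'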

How to Prevent It

- Always check Content-Type headers before parsing responses

- Use try-catch blocks around JSON.parse() calls

- Log raw responses during development to see what you’re actually receiving

- Never use undefined in JSON—use null or omit the property

- Test API endpoints with tools like our HTTP Headers Checker

Tools: Quickly identify unexpected tokens with our JSON Formatter which highlights syntax errors.

Error #2: Unterminated String Literal

Error message:

SyntaxError: Unterminated string literal

JSON.parse: unterminated string literal

Unexpected end of JSON input

What Causes It

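You started a string with a quote but never closed it. The parser keeps reading characters as part of the string until it reaches another quote, a raw line break (not allowed inside JSON strings), or the end of the input, so the reported position can be well past the actual mistake.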

Visual Example

Before (Broken):

{

"name": "John Doe,

"email": "john@example.com"

}

Missing closing quote after “John Doe”.

After (Fixed):

{

"name": "John Doe",

"email": "john@example.com"

}

Before (Broken - unescaped quote inside string):

{

"message": "He said "hello" to me"

}

After (Fixed):

{

"message": "He said \"hello\" to me"

}

How to Fix It

Fix 1: Find and close the unterminated string

Paste your JSON into our formatter (/formatter); it will highlight the unclosed string.
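If the JSON is pretty-printed, you can also locate the problem from code. Because JSON strings cannot contain raw line breaks, a string that is still open when its line ends is almost certainly the unterminated one. A rough sketch (findUnterminatedString is a hypothetical name); it cannot catch a missing quote in single-line JSON, where the string simply runs on to the next quote character.

```javascript
// Find the first string that is still open when its line (or the input) ends.
function findUnterminatedString(text) {
  let i = 0;
  while (i < text.length) {
    if (text[i] !== '"') {
      i++;
      continue;
    }
    const start = i++; // index of the opening quote
    let closed = false;
    while (i < text.length) {
      const ch = text[i];
      if (ch === '\\') {
        i += 2; // skip an escape pair such as \" or \\
        continue;
      }
      if (ch === '\n' || ch === '\r') break; // the string ran into a line break
      i++;
      if (ch === '"') {
        closed = true;
        break;
      }
    }
    if (!closed) {
      const line = text.slice(0, start).split('\n').length; // 1-based line number
      return { position: start, line };
    }
  }
  return null; // every string was closed on its own line
}

const text = '{\n  "name": "John Doe,\n  "email": "john@example.com"\n}';
console.log(findUnterminatedString(text)); // { position: 12, line: 2 }
```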

Fix 2: Escape quotes inside strings

{

"quote": "She said, \"JSON is strict!\"",

"title": "The \"Ultimate\" Guide"

}

Fix 3: Use single quotes inside the string (only inside, not for wrapping)

{

"message": "He said 'hello' to me"

}

How to Prevent It

- Use a JSON-aware editor with syntax highlighting (VS Code, Sublime Text)

- Count your quotes as you write—every opening quote needs a closing one

- Use template literals carefully when building JSON strings:

// ❌ DANGEROUS - easy to create invalid JSON

const json = `{"name": "${userName}"}`; // If userName has quotes, breaks!

// ✅ SAFE - use JSON.stringify()

const json = JSON.stringify({ name: userName }); // Handles escaping automatically

- Validate early with our JSON Formatter before sharing or deploying

Error #3: Missing Comma Between Properties

Error message:

SyntaxError: Expected ',' or '}' after property value in JSON

Unexpected token { in JSON at position 45

What Causes It

You forgot the comma between two properties in an object or two items in an array.

Visual Example

Before (Broken):

{

"firstName": "John"

"lastName": "Doe"

"age": 30

}

After (Fixed):

{

"firstName": "John",

"lastName": "Doe",

"age": 30

}

Before (Broken - missing comma in array):

{

"colors": [

"red"

"blue"

"green"

]

}

After (Fixed):

{

"colors": [

"red",

"blue",

"green"

]

}

How to Fix It

Fix 1: Add commas after each property (except the last)

{

"property1": "value1", ← comma here

"property2": "value2", ← comma here

"property3": "value3" ← NO comma on last item

}
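In a long pretty-printed file, a rough line-based check can point at the lines that probably need a comma: a line that ends with a value, followed by a line that starts a new key or item. This is a heuristic sketch that assumes one property or item per line (findMissingCommas is a hypothetical name); it is not a substitute for a real parser.

```javascript
// Heuristic: a line ending in a value, followed by a line starting a new key or
// value, is probably missing a comma. Assumes one property or item per line.
function findMissingCommas(text) {
  const lines = text.split('\n');
  const endsWithValue = /("|\d|true|false|null|\}|\])\s*$/;
  const startsNewEntry = /^\s*("|\{|\[|-|\d|true|false|null)/;
  const suspects = [];
  for (let i = 0; i < lines.length; i++) {
    if (!endsWithValue.test(lines[i])) continue;
    // Look at the next non-blank line.
    let j = i + 1;
    while (j < lines.length && lines[j].trim() === '') j++;
    if (j < lines.length && startsNewEntry.test(lines[j])) {
      suspects.push(i + 1); // 1-based number of the line that needs a trailing comma
    }
  }
  return suspects;
}

const broken = '{\n  "firstName": "John"\n  "lastName": "Doe"\n  "age": 30\n}';
console.log(findMissingCommas(broken)); // [ 2, 3 ]
```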

Fix 2: Use automatic formatting

// Let JSON.stringify() format it correctly

const obj = {

firstName: "John",

lastName: "Doe",

age: 30

};

const correctJson = JSON.stringify(obj, null, 2);

console.log(correctJson);

Output:

{

"firstName": "John",

"lastName": "Doe",

"age": 30

}

Fix 3: Use our JSON Formatter

Paste your broken JSON into our JSON Formatter tool, and it will:

- Identify missing commas

- Show you exactly where they should be

- Auto-fix the formatting

How to Prevent It

- Use JSON.stringify() instead of manually writing JSON strings

- Enable auto-format on save in your code editor

- Use linters like ESLint with JSON validation rules

- Copy-paste carefully when extracting data from multiple sources

Error #4: Trailing Comma (The Silent Killer)

Error message:

SyntaxError: Unexpected token } in JSON at position 78

Trailing comma in JSON

What Causes It

A comma after the last item in an object or array. JavaScript allows this (ES5+), but JSON does not.

Visual Example

Before (Broken):

{

"name": "John",

"age": 30, ← This trailing comma breaks JSON

}

After (Fixed):

{

"name": "John",

"age": 30

}

Before (Broken - trailing comma in array):

{

"hobbies": [

"reading",

"gaming",

"coding", ← Trailing comma

]

}

After (Fixed):

{

"hobbies": [

"reading",

"gaming",

"coding"

]

}

Why This Error is Tricky

Trailing commas are VALID in JavaScript:

const obj = {

name: "John",

age: 30, // ✅ JavaScript is fine with this

};

But INVALID in JSON:

{

"name": "John",

"age": 30, ← ❌ JSON parsing fails

}

This creates a false sense of security. Your IDE might not flag it. Your JavaScript might work. But the moment you JSON.parse() a string with trailing commas, it fails.

How to Fix It

Fix 1: Remove trailing commas manually

{

"users": [

{"id": 1, "name": "Alice"},

{"id": 2, "name": "Bob"} ← No comma here

],

"total": 2 ← No comma here

}

Fix 2: Use regex to find trailing commas

function findTrailingCommas(jsonString) {

// Find commas before } or ]

const pattern = /,(\s*[}\]])/g;

return jsonString.match(pattern);

}

const json = '{"name": "John", "age": 30,}';

console.log(findTrailingCommas(json)); // [",}"]
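The regex above is fine for spotting trailing commas, but it also matches text inside string values (a value such as "a,}" would be reported), so it is risky for rewriting data. A sketch that removes only structural trailing commas by skipping over strings (removeTrailingCommas is a hypothetical name); it assumes the input is otherwise valid JSON.

```javascript
// Remove commas that directly precede } or ], ignoring anything inside strings.
function removeTrailingCommas(text) {
  let out = '';
  let inString = false;
  for (let i = 0; i < text.length; i++) {
    const ch = text[i];
    if (inString) {
      out += ch;
      if (ch === '\\') {
        out += text[i + 1] ?? ''; // copy the escaped character verbatim
        i++;
      } else if (ch === '"') {
        inString = false;
      }
      continue;
    }
    if (ch === '"') {
      inString = true;
      out += ch;
      continue;
    }
    if (ch === ',') {
      // Peek past whitespace: drop the comma if the next real character closes a container.
      let j = i + 1;
      while (j < text.length && /\s/.test(text[j])) j++;
      if (text[j] === '}' || text[j] === ']') continue;
    }
    out += ch;
  }
  return out;
}

console.log(removeTrailingCommas('{"name": "John", "age": 30,}')); // {"name": "John", "age": 30}
console.log(removeTrailingCommas('{"note": "a,}", "list": [1, 2, ],}')); // {"note": "a,}", "list": [1, 2 ]}
```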

Fix 3: Use our JSON Formatter

Our JSON Formatter automatically detects and removes trailing commas.

How to Prevent It

- Use JSON.stringify() which never adds trailing commas

- Configure your editor to highlight trailing commas in JSON files

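- Lint JSON files with a JSON-aware ESLint plugin, for example eslint-plugin-jsonc, whose jsonc/comma-dangle rule can be set to "never" (ESLint's core comma-dangle rule is written for JavaScript and can't parse .json files by itself)

- Set up pre-commit hooks to validate JSON files

// .eslintrc.js (assumes eslint-plugin-jsonc and jsonc-eslint-parser are installed)

module.exports = {

overrides: [

{

files: ["*.json"],

parser: "jsonc-eslint-parser",

plugins: ["jsonc"],

rules: {

"jsonc/comma-dangle": ["error", "never"]

}

}

]

};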

Error #5: Single Quotes Instead of Double Quotes

Error message:

SyntaxError: Unexpected token ' in JSON at position 8

Invalid property id in JSON

What Causes It

Using single quotes (') instead of double quotes ("). JSON specification requires double quotes for all strings and keys.

This is the #1 most common JSON error, accounting for nearly 40% of all JSON parsing failures.

Visual Example

Before (Broken):

{

'name': 'John Doe',

'email': 'john@example.com',

'age': 30

}

After (Fixed):

{

"name": "John Doe",

"email": "john@example.com",

"age": 30

}

Before (Broken - mixed quotes):

{

"name": 'John Doe',

'age': 30

}

After (Fixed):

{

"name": "John Doe",

"age": 30

}

Why This Happens So Often

Reason 1: JavaScript accepts both

// All valid JavaScript

const obj1 = { name: "John" }; // Double quotes

const obj2 = { name: 'John' }; // Single quotes

const obj3 = { name: `John` }; // Template literals

// Only the first is valid JSON

Reason 2: Copy-pasting from JavaScript code

// JavaScript object (NOT JSON)

const config = {

api: 'https://api.example.com',

timeout: 5000

};

// If you copy this to a .json file, it will break

Reason 3: Text editor auto-quoting

Some editors and word processors automatically convert straight quotes into curly "smart quotes" (‘ ’ or “ ”), which JSON rejects just like single quotes.

How to Fix It

Fix 1: Find and replace all single quotes

function fixQuotes(jsonString) {

// Replace single quotes with double quotes

// Be careful with quotes inside strings!

return jsonString.replace(/'/g, '"');

}

const broken = "{'name': 'John'}";

const fixed = fixQuotes(broken); // {"name": "John"}

⚠️ Warning: Simple find-replace can break if you have apostrophes in your data:

// A blind replace turns this valid JSON:

{"message": "It's a beautiful day"}

// into this invalid JSON:

{"message": "It"s a beautiful day"}
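A safer approach is to convert only the quotes that delimit strings: copy existing double-quoted strings untouched, and rewrite single-quoted ones, escaping any double quotes they contain. A sketch (convertSingleQuotes is a hypothetical name) that assumes the input is otherwise JSON-like; it does not quote bare keys or strip comments.

```javascript
// Convert single-quoted strings to double-quoted ones, leaving existing
// double-quoted strings (and the apostrophes inside them) untouched.
function convertSingleQuotes(text) {
  let out = '';
  let i = 0;
  while (i < text.length) {
    const ch = text[i];
    if (ch === '"') {
      // Copy an existing double-quoted string verbatim, including escapes.
      let j = i + 1;
      while (j < text.length && text[j] !== '"') j += text[j] === '\\' ? 2 : 1;
      out += text.slice(i, j + 1);
      i = j + 1;
    } else if (ch === "'") {
      // Rebuild a single-quoted string as a JSON string.
      let value = '';
      let j = i + 1;
      while (j < text.length && text[j] !== "'") {
        if (text[j] === '\\') {
          const next = text[j + 1] ?? '';
          value += next === "'" ? "'" : '\\' + next; // \' becomes a plain apostrophe
          j += 2;
        } else {
          value += text[j] === '"' ? '\\"' : text[j]; // a bare " must be escaped
          j++;
        }
      }
      out += '"' + value + '"';
      i = j + 1;
    } else {
      out += ch;
      i++;
    }
  }
  return out;
}

console.log(convertSingleQuotes("{'name': 'John'}")); // {"name": "John"}
console.log(convertSingleQuotes('{"message": "It\'s a beautiful day"}')); // unchanged
```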

Fix 2: Use JSON.stringify() for guaranteed correctness

// ✅ Always produces valid JSON with double quotes

const data = {

name: 'John', // Single quotes in JS are fine

message: "It's working"

};

const json = JSON.stringify(data);

// Output: {"name":"John","message":"It's working"}

Fix 3: Use our JSON Formatter

Paste your single-quoted JSON into our JSON Formatter:

- It detects single quote usage

- Converts them to double quotes safely

- Handles edge cases like apostrophes correctly

How to Prevent It

- Always use JSON.stringify() instead of manually creating JSON strings

- Configure your editor to use double quotes for JSON files:

// VS Code settings.json

{

"[json]": {

"editor.defaultFormatter": "esbenp.prettier-vscode",

"editor.formatOnSave": true

},

"prettier.singleQuote": false

}

- Use template literals carefully:

// ❌ WRONG - creates invalid JSON with single quotes

const json = `{'name': '${name}'}`;

// ✅ CORRECT - use JSON.stringify()

const json = JSON.stringify({ name: name });

- Validate before deploying with our JSON Formatter

Error #6: Unescaped Characters in Strings

Error message:

SyntaxError: Unexpected token in JSON at position 34

Invalid escape sequence in JSON

What Causes It

Special characters like quotes, backslashes, newlines, and tabs must be escaped in JSON strings. If they’re not, the parser breaks.

Characters That MUST Be Escaped

| Character | Escape Sequence | Example |
|---|---|---|
| Double quote | \" | "He said \"hi\"" |
| Backslash | \\ | "Path: C:\\Users" |
| Forward slash | \/ (optional; / may also appear unescaped) | "URL: https:\/\/example.com" |
| Newline | \n | "Line 1\nLine 2" |
| Tab | \t | "Col1\tCol2" |
| Carriage return | \r | "Text\r\n" |
| Backspace | \b | "Text\bspace" |
| Form feed | \f | "Page\fbreak" |
| Any other control character (U+0000 to U+001F) | \uXXXX | "Bell\u0007" |

Visual Example

Before (Broken - unescaped quote):

{

"message": "She said "Hello" to me"

}

After (Fixed):

{

"message": "She said \"Hello\" to me"

}

Before (Broken - unescaped backslash):

{

"path": "C:\Users\John\Documents"

}

After (Fixed):

{

"path": "C:\\Users\\John\\Documents"

}

Before (Broken - literal newline):

{

"description": "This is line 1

This is line 2"

}

After (Fixed):

{

"description": "This is line 1\nThis is line 2"

}

How to Fix It

Fix 1: Escape special characters manually

{

"quote": "\"To be or not to be\" - Shakespeare",

"path": "C:\\Program Files\\App",

"multiline": "First line\nSecond line\nThird line",

"tabbed": "Name:\tJohn\nAge:\t30"

}

Fix 2: Use JSON.stringify() to handle escaping automatically

const data = {

message: 'She said "Hello" to me',

path: 'C:\\Users\\John',

multiline: `Line 1
Line 2
Line 3`

};

const json = JSON.stringify(data, null, 2);

console.log(json);

Output:

{

"message": "She said \"Hello\" to me",

"path": "C:\\Users\\John",

"multiline": "Line 1\nLine 2\nLine 3"

}

Fix 3: Use a dedicated escaping function

function escapeJsonString(str) {

return str

.replace(/\\/g, '\\\\') // Backslash

.replace(/"/g, '\\"') // Quote

.replace(/\n/g, '\\n') // Newline

.replace(/\r/g, '\\r') // Carriage return

.replace(/\t/g, '\\t') // Tab

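.replace(/[\b]/g, '\\b') // Backspace: inside [ ], \b means U+0008; a bare /\b/ would match word boundaries

.replace(/\f/g, '\\f') // Form feed

.replace(/[\u0000-\u001f]/g, c => '\\u' + c.charCodeAt(0).toString(16).padStart(4, '0')); // Any other control character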

}

const text = 'Path: C:\\Users\nQuote: "Hello"';

const escaped = escapeJsonString(text);

const json = `{"value": "${escaped}"}`;
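Hand-written escaping like escapeJsonString() is easy to get subtly wrong. A simpler sketch: JSON.stringify() applied to a plain string already returns a complete JSON string literal, surrounding quotes included, with every required escape (other control characters become \u00XX sequences).

```javascript
const raw = 'Path: C:\\Users\nQuote: "Hello"\u0007'; // ends with a BEL control character
const literal = JSON.stringify(raw); // quoted and fully escaped
console.log(literal); // "Path: C:\\Users\nQuote: \"Hello\"\u0007"

// Insert the literal as-is: it already carries its own surrounding quotes.
const doc = `{"value": ${literal}}`;
console.log(JSON.parse(doc).value === raw); // true
```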

How to Prevent It

- Never manually build JSON strings with concatenation

- Always use JSON.stringify() which handles all escaping automatically

- Use raw strings (r-strings) in Python when building paths:

import json

# ❌ WRONG - backslashes need escaping

data = {"path": "C:\Users\John"} # SyntaxError: "\U" starts a Unicode escape

# ✅ CORRECT - use raw string or double backslash

data = {"path": r"C:\Users\John"} # Works

data = {"path": "C:\\Users\\John"} # Also works

json.dumps(data) # Handles escaping automatically

- Test with our JSON Formatter to catch escaping issues

- Use multiline strings carefully:

// ❌ WRONG - literal newlines break JSON

const json = `{

"message": "Line 1

Line 2"

}`;

// ✅ CORRECT - use escape sequences

const json = JSON.stringify({

message: "Line 1\nLine 2"

});

Error #7: Invalid Number Format

Error message:

SyntaxError: Unexpected number in JSON at position 23

Invalid number format

What Causes It

JSON has strict rules for numbers:

- No leading zeros (except for

0.xdecimals) - No hexadecimal (

0x1F) - No octal (

0o17) - No binary (

0b1010) - No trailing decimal point (

10.) - No leading decimal point (

.5) - No plus sign prefix (

+10)

Visual Example

Before (Broken):

{

"price": 010.50, ← Leading zero

"quantity": 0x1F, ← Hexadecimal

"discount": .5, ← Missing leading zero

"total": 100., ← Trailing decimal

"bonus": +10 ← Plus sign

}

After (Fixed):

{

"price": 10.50,

"quantity": 31,

"discount": 0.5,

"total": 100.0,

"bonus": 10

}

Before (Broken - special number values):

{

"infinity": Infinity,

"notANumber": NaN,

"negInfinity": -Infinity

}

After (Fixed - use null or strings):

{

"infinity": null,

"notANumber": null,

"negInfinity": null

}

Or if you need to preserve the values:

{

"infinity": "Infinity",

"notANumber": "NaN",

"negInfinity": "-Infinity"

}

Valid Number Formats in JSON

✅ VALID:

{

"integer": 42,

"negative": -17,

"decimal": 3.14159,

"scientific": 6.022e23,

"negativeScientific": -1.6e-19,

"zero": 0,

"decimalZero": 0.5

}

❌ INVALID:

{

"leadingZero": 007, // Octal notation

"hex": 0xFF, // Hexadecimal

"binary": 0b1010, // Binary

"trailingDecimal": 10., // Must be 10.0 or 10

"leadingDecimal": .5, // Must be 0.5

"plusSign": +42, // No plus sign

"infinity": Infinity, // Not allowed

"nan": NaN // Not allowed

}
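The valid/invalid split above follows the number grammar in the JSON specification (RFC 8259): an optional minus sign, an integer part without leading zeros, an optional fraction, and an optional exponent. As a regular expression, here is a sketch for checking individual number tokens (isJsonNumber is a hypothetical name):

```javascript
// -?               optional minus (a plus sign is not allowed)
// (0|[1-9]\d*)     integer part: a lone 0, or digits without a leading zero
// (\.\d+)?         optional fraction with at least one digit after the point
// ([eE][+-]?\d+)?  optional exponent
const JSON_NUMBER = /^-?(0|[1-9]\d*)(\.\d+)?([eE][+-]?\d+)?$/;

function isJsonNumber(token) {
  return JSON_NUMBER.test(token);
}

const valid = ['42', '-17', '3.14159', '6.022e23', '-1.6e-19', '0', '0.5'];
const invalid = ['007', '0xFF', '0b1010', '10.', '.5', '+42', 'Infinity', 'NaN'];

console.log(valid.every(isJsonNumber)); // true
console.log(invalid.some(isJsonNumber)); // false
```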

How to Fix It

Fix 1: Convert special values to null or strings

function jsonSafeNumber(value) {

if (typeof value === 'number' && !Number.isFinite(value)) {

return null; // or return "Infinity"/"NaN" as string

}

return value;

}

const data = {

result: jsonSafeNumber(10 / 0), // null instead of Infinity

calculation: jsonSafeNumber(Math.sqrt(-1)) // null instead of NaN

};

const json = JSON.stringify(data);

// {"result":null,"calculation":null}

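Fix 2: Use JSON.stringify() with a replacer function

JSON.stringify() already writes NaN, Infinity, and -Infinity as null on its own; a replacer makes that choice explicit and lets you substitute a different value when null is wrong for your data.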

const data = {

value1: Infinity,

value2: NaN,

value3: -Infinity,

value4: 42

};

const json = JSON.stringify(data, (key, value) => {

if (typeof value === 'number') {

if (!isFinite(value)) {

return null; // Replace Infinity/NaN with null

}

}

return value;

});

console.log(json);

// {"value1":null,"value2":null,"value3":null,"value4":42}

Fix 3: Remove leading zeros and fix formatting

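// Rough regex fixes for values that follow a colon. Array items and text
// inside string values are not handled safely, so re-validate the result.

function fixNumberFormat(jsonString) {

return jsonString

.replace(/:\s*0+(\d+)/g, ': $1') // Remove leading zeros (010.50 -> 10.50)

.replace(/:\s*(\d+)\.(?!\d)/g, ': $1.0') // Fix trailing decimal (100. -> 100.0) without touching 10.50

.replace(/:\s*\.(\d+)/g, ': 0.$1'); // Fix leading decimal (.5 -> 0.5)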

}

How to Prevent It

- Let JavaScript handle number serialization via JSON.stringify()

- Validate number values before serialization:

function validateJsonNumber(num) {

if (typeof num !== 'number') return false;

if (!isFinite(num)) return false; // Rejects Infinity and NaN

return true;

}

// Use before adding to JSON

if (validateJsonNumber(myValue)) {

data.value = myValue;

} else {

data.value = null;

}

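- Use TypeScript to catch type mismatches at compile time (but note that NaN and Infinity are ordinary values of type number, so only runtime checks like the one above catch them):

interface ApiResponse {

count: number;

total: number;

}

// TypeScript rejects strings or undefined here, but would still accept NaN or Infinity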

const response: ApiResponse = {

count: 10,

total: 100

};

- Test edge cases with our JSON Formatter

Error #8: NaN, Infinity, and BigInt Values

Error message:

TypeError: Do not know how to serialize a BigInt

Invalid JSON: NaN is not allowed

What Causes It

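JavaScript allows NaN, Infinity, and -Infinity as number values, but JSON does not. JSON.stringify() doesn't complain about them: it silently writes them as null, so the values are lost without warning. BigInt values, another numeric type with no JSON equivalent, go the other way and make JSON.stringify() throw a TypeError.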

Visual Example

Before (Broken - JavaScript):

const data = {

division: 10 / 0, // Infinity

impossible: Math.sqrt(-1), // NaN

negative: -Infinity,

bigNumber: 9007199254740991n // BigInt

};

JSON.stringify(data);

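Output:

TypeError: Do not know how to serialize a BigInt

The BigInt property makes JSON.stringify() throw. Remove it and the call succeeds, but every non-finite number silently becomes null:

{"division":null,"impossible":null,"negative":null}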

After (Fixed - explicit handling):

const data = {

division: null, // Explicitly null

impossible: null,

negative: null,

bigNumber: "9007199254740991" // Convert BigInt to string

};

JSON.stringify(data);

Output (Valid JSON):

{"division":null,"impossible":null,"negative":null,"bigNumber":"9007199254740991"}

Why This Happens

Calculation results:

console.log(10 / 0); // Infinity

console.log(0 / 0); // NaN

console.log(Math.sqrt(-1)); // NaN

console.log(Number.MAX_VALUE * 2); // Infinity

JSON serialization behavior:

JSON.stringify({ value: Infinity }); // {"value":null}

JSON.stringify({ value: NaN }); // {"value":null}

JSON.stringify({ value: -Infinity }); // {"value":null}

JSON.stringify({ value: 10n }); // ❌ TypeError!

How to Fix It

Fix 1: Check before serializing

function sanitizeNumber(value) {

if (typeof value === 'bigint') {

return value.toString();

}

if (typeof value === 'number' && !isFinite(value)) {

return null; // or throw error, or use special string

}

return value;

}

const data = {

result: sanitizeNumber(10 / 0), // null

count: sanitizeNumber(42), // 42

bigId: sanitizeNumber(1234567890n) // "1234567890"

};

Fix 2: Use a custom JSON replacer

function jsonReplacer(key, value) {

// Handle BigInt

if (typeof value === 'bigint') {

return value.toString();

}

// Handle Infinity and NaN

if (typeof value === 'number') {

if (value === Infinity) return "Infinity";

if (value === -Infinity) return "-Infinity";

if (isNaN(value)) return "NaN";

}

return value;

}

const data = {

infinity: Infinity,

nan: NaN,

bigNum: 1234567890123456789n,

normal: 42

};

const json = JSON.stringify(data, jsonReplacer, 2);

console.log(json);

Output:

{

"infinity": "Infinity",

"nan": "NaN",

"bigNum": "1234567890123456789",

"normal": 42

}
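Converting a BigInt to a string solves only half the problem: the receiving side has to turn it back. A sketch of a round trip, assuming you know which keys carry BigInt values (the key name bigNum and the helper names toJson/fromJson are hypothetical). It also shows why the string detour is needed: a JSON number that large loses precision when parsed as a regular number.

```javascript
// Hypothetical: the set of keys your application stores as BigInt.
const BIGINT_KEYS = new Set(['bigNum']);

function toJson(value) {
  return JSON.stringify(value, (key, v) => (typeof v === 'bigint' ? v.toString() : v));
}

function fromJson(text) {
  return JSON.parse(text, (key, v) =>
    BIGINT_KEYS.has(key) && typeof v === 'string' ? BigInt(v) : v
  );
}

const original = { bigNum: 1234567890123456789n, normal: 42 };
const text = toJson(original);
console.log(text); // {"bigNum":"1234567890123456789","normal":42}
console.log(fromJson(text).bigNum === original.bigNum); // true

// Without the string detour, the integer no longer fits a double exactly:
const lossy = JSON.parse('{"id": 1234567890123456789}').id;
console.log(Number.isSafeInteger(lossy)); // false
```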

Fix 3: Validate calculations before using results

function safeDivide(a, b) {

if (b === 0) {

return null; // Or throw error, or return 0

}

return a / b;

}

const result = safeDivide(10, 0); // null instead of Infinity

How to Prevent It

- Validate mathematical operations:

function performCalculation(a, b) {

const result = a / b;

if (!isFinite(result)) {

throw new Error(`Invalid calculation: ${a} / ${b} = ${result}`);

}

return result;

}

- Use defensive parsing:

function parseJsonSafely(jsonString) {

return JSON.parse(jsonString, (key, value) => {

// Convert string representations back to special values if needed

if (value === "Infinity") return Infinity;

if (value === "-Infinity") return -Infinity;

if (value === "NaN") return NaN;

return value;

});

}

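- Enable TypeScript strict checks (they catch null and undefined mistakes; NaN and Infinity still type-check as number, so keep the runtime checks above):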

// tsconfig.json

{

"compilerOptions": {

"strict": true,

"noImplicitAny": true

}

}

- Test edge cases with our JSON Formatter

Error #9: Undefined Values

Error message:

undefined is not valid JSON

Property value undefined is not valid JSON

What Causes It

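In JavaScript, undefined is a primitive value. In JSON, it doesn't exist. JSON.stringify() never throws on undefined: it silently omits undefined properties from objects and writes undefined array items as null. JSON.stringify(undefined) itself returns undefined rather than a string, and passing that result to JSON.parse() is what produces the "undefined is not valid JSON" error.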

Visual Example

Before (JavaScript object):

const user = {

name: "John",

age: undefined,

email: null

};

console.log(JSON.stringify(user));

Output (undefined is omitted):

{"name":"John","email":null}

Note: age property is completely removed!

Before (Array with undefined):

const data = [1, undefined, 3];

console.log(JSON.stringify(data));

Output (undefined becomes null in arrays):

[1,null,3]

The undefined vs null Difference

| Value | JSON Behavior | Use Case |
|---|---|---|
| undefined | Omitted from objects, becomes null in arrays | Variable not initialized |
| null | Preserved as null | Intentionally empty value |

Example:

const data = {

present: null, // "I know this is empty"

missing: undefined // "I don't know the value yet"

};

JSON.stringify(data); // {"present":null}

// 'missing' is completely gone!

How to Fix It

Fix 1: Replace undefined with null

const user = {

name: "John",

age: null, // Use null instead of undefined

email: null

};

JSON.stringify(user);

// {"name":"John","age":null,"email":null}

Fix 2: Use a replacer to handle undefined

function replacer(key, value) {

if (value === undefined) {

return null; // Convert undefined to null

}

return value;

}

const data = {

name: "John",

age: undefined,

email: "john@example.com"

};

JSON.stringify(data, replacer);

// {"name":"John","age":null,"email":"john@example.com"}

Fix 3: Remove undefined properties before serialization

function removeUndefined(obj) {

return Object.fromEntries(

Object.entries(obj).filter(([_, value]) => value !== undefined)

);

}

const user = {

name: "John",

age: undefined,

email: "john@example.com"

};

const cleaned = removeUndefined(user);

JSON.stringify(cleaned);

// {"name":"John","email":"john@example.com"}

Fix 4: Use default values

const user = {

name: "John",

age: userAge ?? null, // Nullish coalescing

email: userEmail || null, // Logical OR (careful: also turns "", 0, and false into null)

phone: userPhone === undefined ? null : userPhone

};

How to Prevent It

- Initialize all properties explicitly:

// ❌ BAD - properties may be undefined

const user = {

name: userData.name,

age: userData.age,

email: userData.email

};

// ✅ GOOD - guarantee no undefined values

const user = {

name: userData.name ?? "Unknown",

age: userData.age ?? null,

email: userData.email ?? null

};

- Use TypeScript to enforce null over undefined:

interface User {

name: string;

age: number | null; // Force explicit null, not undefined

email: string | null;

}

const user: User = {

name: "John",

age: null,

email: null

};

- Validate before serialization:

function validateForJson(obj) {

for (const [key, value] of Object.entries(obj)) {

if (value === undefined) {

throw new Error(`Property "${key}" is undefined. Use null instead.`);

}

}

return obj;

}

const data = validateForJson({ name: "John", age: null });

JSON.stringify(data);

- Use our JSON Formatter to catch undefined value issues
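The validateForJson() check above only looks at top-level properties. A recursive sketch (findUndefinedPaths is a hypothetical name) that reports where undefined sits anywhere in a nested structure, using a simple $.a.b[0] path notation; it assumes the data contains no circular references.

```javascript
// Walk nested objects and arrays and collect the paths of undefined values.
function findUndefinedPaths(value, path = '$', found = []) {
  if (value === undefined) {
    found.push(path);
  } else if (Array.isArray(value)) {
    value.forEach((item, i) => findUndefinedPaths(item, `${path}[${i}]`, found));
  } else if (value !== null && typeof value === 'object') {
    for (const [key, child] of Object.entries(value)) {
      findUndefinedPaths(child, `${path}.${key}`, found);
    }
  }
  return found;
}

const user = { name: 'John', profile: { age: undefined }, tags: ['a', undefined] };
console.log(findUndefinedPaths(user)); // [ '$.profile.age', '$.tags[1]' ]
```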

Error #10: Circular References

Error message:

TypeError: Converting circular structure to JSON

TypeError: cyclic object value

What Causes It

An object references itself, either directly or through a chain of other objects. Serializing it would loop forever, and JSON has no syntax for references, so JSON.stringify() throws instead.

Visual Example

Before (Broken - direct circular reference):

const person = {

name: "John",

friend: null

};

person.friend = person; // person references itself

JSON.stringify(person); // ❌ TypeError: Converting circular structure to JSON

Before (Broken - indirect circular reference):

const john = { name: "John" };

const jane = { name: "Jane" };

john.friend = jane;

jane.friend = john; // Circular: john → jane → john

JSON.stringify(john); // ❌ TypeError!

After (Fixed - remove circular reference):

const john = { name: "John" };

const jane = { name: "Jane" };

john.friendId = "jane-123";

jane.friendId = "john-456";

JSON.stringify(john); // ✅ Works!

// {"name":"John","friendId":"jane-123"}

Real-World Examples

Example 1: DOM elements

const element = document.getElementById('myDiv');

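// ❌ Not useful - DOM properties live on the prototype, so this usually returns "{}"; properties added by frameworks can even make it throw a circular-structure error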

JSON.stringify(element);

// ✅ Extract only needed data

const elementData = {

tagName: element.tagName,

id: element.id,

className: element.className,

textContent: element.textContent

};

JSON.stringify(elementData);

Example 2: Parent-child relationships

class TreeNode {

constructor(value) {

this.value = value;

this.children = [];

this.parent = null; // Circular reference!

}

addChild(child) {

this.children.push(child);

child.parent = this; // Creates circular reference

}

}

const root = new TreeNode('root');

const child = new TreeNode('child');

root.addChild(child);

// ❌ Fails due to circular reference

JSON.stringify(root);

How to Fix It

Fix 1: Use JSON.stringify() with a circular reference handler

function getCircularReplacer() {

const seen = new WeakSet();

return (key, value) => {

if (typeof value === 'object' && value !== null) {

if (seen.has(value)) {

return '[Circular]'; // Replace repeated reference (note: shared, non-circular references are replaced too)

}

seen.add(value);

}

return value;

};

}

const john = { name: "John" };

const jane = { name: "Jane" };

john.friend = jane;

jane.friend = john;

const json = JSON.stringify(john, getCircularReplacer(), 2);

console.log(json);

Output:

{

"name": "John",

"friend": {

"name": "Jane",

"friend": "[Circular]"

}

}
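One caveat with getCircularReplacer(): its WeakSet remembers every object it has seen, so an object that is merely shared in two places (not a cycle) is also replaced with "[Circular]". A sketch (decycle is a hypothetical name) that tracks only the current chain of ancestors, so only true back-references are replaced:

```javascript
// Replace only references back to an ancestor (true cycles) with "[Circular]".
function decycle(value, ancestors = []) {
  if (typeof value !== 'object' || value === null) return value;
  // Honour toJSON() (Dates, custom classes) the way JSON.stringify() would.
  if (typeof value.toJSON === 'function') {
    const replaced = value.toJSON();
    if (replaced !== value) return decycle(replaced, ancestors);
  }
  if (ancestors.includes(value)) return '[Circular]';
  const chain = [...ancestors, value];
  if (Array.isArray(value)) return value.map((item) => decycle(item, chain));
  const result = {};
  for (const [key, child] of Object.entries(value)) {
    result[key] = decycle(child, chain);
  }
  return result;
}

const shared = { x: 1 };
console.log(JSON.stringify(decycle({ a: shared, b: shared }))); // {"a":{"x":1},"b":{"x":1}}

const john = { name: "John" };
const jane = { name: "Jane", friend: john };
john.friend = jane;
console.log(JSON.stringify(decycle(john))); // {"name":"John","friend":{"name":"Jane","friend":"[Circular]"}}
```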

Fix 2: Remove circular properties before serialization

function removeCircular(obj, seen = new WeakSet()) {

if (typeof obj !== 'object' || obj === null) {

return obj;

}

if (seen.has(obj)) {

return undefined; // Skip circular reference

}

seen.add(obj);

const result = Array.isArray(obj) ? [] : {};

for (const key in obj) {

result[key] = removeCircular(obj[key], seen);

}

return result;

}

const loop = { name: "loop" };

loop.self = loop; // circular

const cleaned = removeCircular(loop);

JSON.stringify(cleaned); // {"name":"loop"}

Fix 3: Use IDs instead of references

// ❌ BAD - circular references

const john = { name: "John" };

const jane = { name: "Jane" };

john.friend = jane;

jane.friend = john;

// ✅ GOOD - use IDs

const users = {

"john-123": {

id: "john-123",

name: "John",

friendId: "jane-456"

},

"jane-456": {

id: "jane-456",

name: "Jane",

friendId: "john-123"

}

};

JSON.stringify(users); // Works perfectly!

Fix 4: Use specialized libraries

// Using flatted library

import { stringify, parse } from 'flatted';

const john = { name: "John" };

const jane = { name: "Jane" };

john.friend = jane;

jane.friend = john;

const json = stringify(john); // Handles circular references

const restored = parse(json); // Restores the structure

How to Prevent It

- Design data structures without circular references

- Use IDs to represent relationships instead of object references

- Normalize your data before serialization:

// Instead of nested objects, use normalized structure

const normalized = {

users: {

"user-1": { id: "user-1", name: "John", friendIds: ["user-2"] },

"user-2": { id: "user-2", name: "Jane", friendIds: ["user-1"] }

}

};

- Implement toJSON() method for custom objects:

class TreeNode {

constructor(value) {

this.value = value;

this.children = [];

this.parent = null;

}

toJSON() {

// Custom serialization without parent reference

return {

value: this.value,

children: this.children // Only include children, not parent

};

}

}

const root = new TreeNode('root');

const child = new TreeNode('child');

root.children.push(child);

child.parent = root;

JSON.stringify(root); // Works! toJSON() handles it

Error #11: Duplicate Keys in Objects

Error message:

Warning: Duplicate key 'name' in object

SyntaxError: Duplicate data property in object literal

What Causes It

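Having the same key appear more than once in a JSON object. The JSON specification (RFC 8259) says object names SHOULD be unique and warns that software receiving duplicates behaves unpredictably: many parsers, including JavaScript's JSON.parse(), silently keep only the last value, while others report an error or return every pair.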

Visual Example

Before (Problematic):

{

"name": "John",

"age": 30,

"name": "Jane"

}

Parser behavior (last value wins):

const result = JSON.parse('{"name":"John","age":30,"name":"Jane"}');

console.log(result);

// {name: "Jane", age: 30}

// First "name" is silently overwritten!

After (Fixed):

{

"firstName": "John",

"lastName": "Jane",

"age": 30

}

Or if both values are needed:

{

"names": ["John", "Jane"],

"age": 30

}

Why This is Dangerous

Problem 1: Data loss

const userData = {

email: "old@example.com",

name: "John",

email: "new@example.com" // Overwrites first email silently

};

JSON.stringify(userData);

// {"email":"new@example.com","name":"John"} ("email" keeps its first position but takes the last value)

// Lost old@example.com without warning!

Problem 2: Inconsistent parser behavior

// Some parsers throw errors

// Some use first value

// Some use last value

// Some merge values

// Result depends on the JSON parser used!

Problem 3: Security risks

{

"role": "user",

"permissions": ["read"],

"role": "admin"

}

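Suppose a validator reads the first "role" and the application reads the last one. A request that passed validation as "user" is then processed as "admin", and the user has just escalated their privileges.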

How to Fix It

Fix 1: Rename duplicate keys

{

"personalEmail": "john@personal.com",

"workEmail": "john@work.com",

"phone": "+1234567890"

}

Fix 2: Use arrays for multiple values

{

"name": "John Doe",

"emails": [

"john@personal.com",

"john@work.com"

]

}

Fix 3: Use nested objects

{

"name": "John Doe",

"contact": {

"personal": {

"email": "john@personal.com",

"phone": "+1111111111"

},

"work": {

"email": "john@work.com",

"phone": "+2222222222"

}

}

}

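Fix 4: Detect duplicates before parsing, not after

Once a JSON string has been parsed, or a JavaScript object literal has been evaluated, the duplicate keys are already gone. Checking Object.keys() on the result can never find them:

const data = JSON.parse('{"name":"John","age":30,"name":"Jane"}');

console.log(Object.keys(data)); // ["name", "age"]

// The first "name" was discarded during parsing, so there is nothing left to detect

To catch duplicates, inspect the raw JSON text (Fix 5) or let a linter flag them in source code (see no-dupe-keys below).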

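Fix 5: Scan the raw JSON string for repeated keys (quick heuristic)

This check runs on the text before parsing, so it can see duplicates that JSON.parse() would drop. It is deliberately crude: it flags any key name that appears twice anywhere in the string. That includes keys that legitimately repeat in different objects, such as "id" in every element of an array.

function checkDuplicateKeys(jsonString) {

// Match "key" (allowing escaped quotes inside it), optional whitespace, then a colon

const keyPattern = /"((?:[^"\\]|\\.)*)"\s*:/g;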

const keys = [];

let match;

while ((match = keyPattern.exec(jsonString)) !== null) {

keys.push(match[1]);

}

const duplicates = keys.filter((key, index) => keys.indexOf(key) !== index);

if (duplicates.length > 0) {

console.warn('Duplicate keys found:', [...new Set(duplicates)]);

return false;

}

return true;

}

const json = '{"name":"John","age":30,"name":"Jane"}';

checkDuplicateKeys(json); // Warns about "name"
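The regex above can't tell one object from another, so it reports false positives on perfectly normal data. Below is a minimal sketch of a scope-aware alternative. It assumes the input is otherwise well-formed JSON and does not validate syntax. findDuplicateKeys is a hypothetical helper, not a library function:

```javascript
// Scope-aware duplicate-key finder. Keeps one Set of seen keys per open object,
// so a key that repeats in *different* objects (e.g. "id" in every array element)
// is not reported. Assumes the input is otherwise well-formed JSON.
function findDuplicateKeys(jsonString) {
  const scopes = [];      // one entry per open bracket: a Set for {, null for [
  const duplicates = [];
  let i = 0;

  while (i < jsonString.length) {
    const ch = jsonString[i];

    if (ch === '{') {
      scopes.push(new Set());
      i++;
    } else if (ch === '[') {
      scopes.push(null);
      i++;
    } else if (ch === '}' || ch === ']') {
      scopes.pop();
      i++;
    } else if (ch === '"') {
      // Find the closing quote, skipping over escaped characters like \"
      let end = i + 1;
      while (end < jsonString.length && jsonString[end] !== '"') {
        end += jsonString[end] === '\\' ? 2 : 1;
      }
      // A string followed (after optional whitespace) by ':' is a key
      let next = end + 1;
      while (next < jsonString.length && /\s/.test(jsonString[next])) {
        next++;
      }
      const scope = scopes[scopes.length - 1];
      if (jsonString[next] === ':' && scope instanceof Set) {
        // Decode escapes so "a" and "\u0061" count as the same key
        const key = JSON.parse(jsonString.slice(i, end + 1));
        if (scope.has(key)) {
          duplicates.push(key);
        }
        scope.add(key);
      }
      i = end + 1;
    } else {
      i++;
    }
  }
  return duplicates;
}

findDuplicateKeys('{"name":"John","age":30,"name":"Jane"}'); // returns ['name']
findDuplicateKeys('[{"id":1},{"id":2}]');                    // returns []
```

Braces and brackets inside string values are skipped along with the rest of the string, so text like "{not an object}" does not open a new scope.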

How to Prevent It

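- Build JSON with JSON.stringify() instead of by hand. A JavaScript object can't hold two properties with the same name (a later assignment replaces the earlier value), so the output never contains duplicate keys: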

const obj = {};

obj.name = "John";

obj.age = 30;

obj.name = "Jane"; // Overwrites

JSON.stringify(obj); // {"name":"Jane","age":30}

- Use Map for dynamic keys:

const data = new Map();

data.set('name', 'John');

data.set('age', 30);

data.set('name', 'Jane'); // Overwrites, but explicitly

const obj = Object.fromEntries(data);

JSON.stringify(obj);

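- Enable linting rules to catch duplicate keys in JavaScript object literals. Note that no-dupe-keys does not check .json files; those need a JSON-aware linter or plugin:

// .eslintrc.js

module.exports = {

  rules: {

    "no-dupe-keys": "error"

  }

};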

- Validate with strict parsers:

// Use a strict JSON validator

function strictJsonParse(jsonString) {

// Check for duplicate keys before parsing

if (!checkDuplicateKeys(jsonString)) {

throw new Error('JSON contains duplicate keys');

}

return JSON.parse(jsonString);

}

- Test with our JSON Formatter which highlights duplicate keys

Error #12: Invalid Unicode Sequences

Error message:

SyntaxError: Invalid Unicode escape sequence

Malformed Unicode character escape sequence

What Causes It

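JSON strings may contain any Unicode character directly, except double quotes, backslashes, and control characters (U+0000 to U+001F), which must be escaped. Any \u escape must have exactly four hexadecimal digits (\uXXXX). Characters above U+FFFF, such as most emoji, are written as two escapes that form a UTF-16 surrogate pair. Any other form is an invalid escape and breaks parsing.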

Visual Example

Before (Broken - invalid escape):

{

"message": "Hello \u00"

}

Only two hex digits follow \u; JSON requires exactly four.

After (Fixed):

{

"message": "Hello \u0000"

}

Before (Broken - wrong format):

{

"emoji": "\u{1F600}"

}

ES6 Unicode format doesn’t work in JSON.

After (Fixed - use UTF-16 surrogate pairs):

{

"emoji": "\uD83D\uDE00"

}

Common Unicode Issues

Issue 1: Incomplete escape sequences

// ❌ WRONG - must be exactly 4 hex digits

{

"char1": "\u00",

"char2": "\u0",

"char3": "\u"

}

// ✅ CORRECT

{

"null": "\u0000",

"tab": "\u0009",

"space": "\u0020"

}

Issue 2: ES6 Unicode escapes in JSON

// ✅ Valid in JavaScript (ES2015 code point escape)

const js = { emoji: "\u{1F600}" };

// ❌ Invalid in JSON

const badJson = '{"emoji": "\\u{1F600}"}'; // JSON.parse(badJson) throws

// ✅ Valid in JSON (use surrogate pairs)

const goodJson = '{"emoji": "\\uD83D\\uDE00"}';

Issue 3: Emojis and special characters

{

"direct": "Hello 😀",

"escaped": "Hello \uD83D\uDE00"

}

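Both are valid. The escaped form is plain ASCII, so it survives tools and transports that mangle non-ASCII bytes. The direct form works as long as the file is saved as UTF-8.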

How to Fix It

Fix 1: Use proper Unicode escape format

{

"copyright": "\u00A9",

"registered": "\u00AE",

"trademark": "\u2122",

"euro": "\u20AC",

"yen": "\u00A5"

}

Fix 2: Convert emojis to surrogate pairs

function emojiToSurrogatePair(emoji) {

const codePoint = emoji.codePointAt(0);

if (codePoint > 0xFFFF) {

const high = Math.floor((codePoint - 0x10000) / 0x400) + 0xD800;

const low = ((codePoint - 0x10000) % 0x400) + 0xDC00;

return `\\u${high.toString(16).toUpperCase()}\\u${low.toString(16).toUpperCase()}`;

}

return `\\u${codePoint.toString(16).padStart(4, '0').toUpperCase()}`;

}

console.log(emojiToSurrogatePair('😀')); // \uD83D\uDE00

console.log(emojiToSurrogatePair('©')); // \u00A9

Fix 3: Let JSON.stringify() handle encoding

const data = {

message: "Hello 😀 © ®",

symbols: "→ ← ↑ ↓"

};

const json = JSON.stringify(data);

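// Characters such as 😀, © and → are emitted as-is (valid in UTF-8 JSON); JSON.stringify() escapes only quotes, backslashes, control characters and lone surrogates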
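If a downstream tool or transport mangles non-ASCII bytes, you can post-process the output so that every character above U+007F becomes a \uXXXX escape. Below is a minimal sketch. stringifyAscii is a hypothetical helper, not a built-in, and it assumes any `space` argument is plain ASCII. Because JavaScript strings are UTF-16, emoji come out as surrogate pairs without extra work:

```javascript
// Serialize with JSON.stringify(), then replace every non-ASCII UTF-16 code unit
// with a \uXXXX escape. With an ASCII `space` argument, non-ASCII characters can
// only occur inside JSON strings, where \uXXXX is always valid. Characters above
// U+FFFF (like 😀) are already two code units in a JavaScript string, so each
// half of the surrogate pair is escaped separately.
function stringifyAscii(value, replacer, space) {
  const json = JSON.stringify(value, replacer, space);
  if (json === undefined) {
    return json; // e.g. JSON.stringify(undefined)
  }
  return json.replace(
    /[\u0080-\uFFFF]/g,
    (ch) => '\\u' + ch.charCodeAt(0).toString(16).toUpperCase().padStart(4, '0')
  );
}

const ascii = stringifyAscii({ message: 'Hello 😀 ©' });
console.log(ascii); // {"message":"Hello \uD83D\uDE00 \u00A9"}
```

JSON.parse() turns the escapes back into the original characters, so this changes only how the text is transported, not the data.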

Fix 4: Validate Unicode escapes

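function validateUnicodeEscapes(jsonString) {

  // Find a \u that starts a real escape (preceded by an even number of backslashes,

  // so an escaped backslash like "C:\\users" is not flagged) and is not followed

  // by exactly 4 hex digits

  const invalidEscape = /(?<!\\)(?:\\\\)*\\u(?![0-9a-fA-F]{4})/;

  if (invalidEscape.test(jsonString)) {

    throw new Error('Invalid Unicode escape sequence detected');

  }

  return true;

}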

const json = '{"char": "\\u00A9"}';

validateUnicodeEscapes(json); // Valid

const bad = '{"char": "\\u00A"}';

validateUnicodeEscapes(bad); // ❌ Throws error

Unicode Escape Reference

| Character | Escape Sequence | Description |
|---|---|---|
| Null | \u0000 | Null character |
| Tab | \u0009 or \t | Horizontal tab |
| Newline | \u000A or \n | Line feed |
| Return | \u000D or \r | Carriage return |
| Space | \u0020 | Space |
| © | \u00A9 | Copyright |
| ® | \u00AE | Registered |
| € | \u20AC | Euro sign |
| 😀 | \uD83D\uDE00 | Grinning face emoji |

How to Prevent It

- Use native characters when possible:

{

"message": "© 2025 Company Name"

}

Instead of:

{

"message": "\u00A9 2025 Company Name"

}

- Let JSON.stringify() handle escaping:

const text = "Special chars: © ® ™ € 😀";

const json = JSON.stringify({ message: text });

// JSON.stringify() adds every escape JSON requires (quotes, backslashes, control characters)

- Ensure UTF-8 encoding for JSON files:

// Node.js

const fs = require('fs');

fs.writeFileSync('data.json', JSON.stringify(data), 'utf-8');

// Specify encoding explicitly

- Test with international characters using our JSON Formatter

Error #13: Byte Order Mark (BOM) Issues

Error message:

SyntaxError: Unexpected token in JSON at position 0

Invalid character at start of JSON

What Causes It

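A Byte Order Mark (BOM) is an invisible Unicode character (U+FEFF, stored as the bytes EF BB BF in UTF-8) that some text editors add to the beginning of files. RFC 8259 forbids adding one to transmitted JSON, and JSON.parse() does not treat it as whitespace, so parsing fails at position 0.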

Visual Example

Before (Broken - with BOM):

{"name": "John"}

The character is invisible but breaks parsing!

After (Fixed - BOM removed):

{"name": "John"}

How to Detect BOM

Method 1: Hexadecimal view

# View file in hex

xxd data.json | head -n 1

# Without BOM:

00000000: 7b22 6e61 6d65 223a 2022 4a6f 686e 227d {"name": "John"}

# With BOM:

00000000: efbb bf7b 226e 616d 6522 3a20 224a 6f68 ...{"name": "Joh

# The first three bytes (ef bb bf) are the BOM

Method 2: JavaScript detection

function hasBOM(str) {

return str.charCodeAt(0) === 0xFEFF;

}

const fileContent = '\uFEFF{"name": "John"}'; // \uFEFF is the BOM

console.log(hasBOM(fileContent)); // true

Method 3: Node.js file reading

const fs = require('fs');

const content = fs.readFileSync('data.json', 'utf-8');

if (content.charCodeAt(0) === 0xFEFF) {

console.log('File has BOM!');

const withoutBOM = content.slice(1);

JSON.parse(withoutBOM); // Works!

}

How to Fix It

Fix 1: Remove BOM before parsing

function removeBOM(str) {

if (str.charCodeAt(0) === 0xFEFF) {

return str.slice(1);

}

return str;

}

const jsonString = '\uFEFF{"name": "John"}'; // starts with a BOM

const cleaned = removeBOM(jsonString);

const parsed = JSON.parse(cleaned); // Works!

Fix 2: Use strip-bom library (Node.js)

const stripBom = require('strip-bom'); // strip-bom v4; v5+ is ESM-only: import stripBom from 'strip-bom'

const fs = require('fs');

const content = fs.readFileSync('data.json', 'utf-8');

const cleaned = stripBom(content);

const data = JSON.parse(cleaned);

Fix 3: Configure your text editor to save without BOM

VS Code:

// settings.json

{

"files.encoding": "utf8",

"files.autoGuessEncoding": false

}

Notepad++ (Windows):

- Encoding → Convert to UTF-8 (labelled "Convert to UTF-8 without BOM" in older versions)

Sublime Text:

- File → Save with Encoding → UTF-8

Fix 4: Remove BOM from files using command line

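# Linux (GNU sed); BSD sed on macOS needs -i '' and does not understand \x escapes

sed -i '1s/^\xEF\xBB\xBF//' data.json

# macOS (or anywhere Perl is installed)

perl -i -pe 's/^\xEF\xBB\xBF// if $. == 1' data.json

# PowerShell 7+ (utf8NoBOM writes UTF-8 without a BOM; Windows PowerShell 5.1 lacks this option)

$content = Get-Content data.json -Raw

$content = $content.TrimStart([char]0xFEFF)

Set-Content data.json $content -NoNewline -Encoding utf8NoBOM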

How to Prevent It

- Configure your editor to save without BOM (UTF-8, not UTF-8-BOM)

- Use version control checks:

# Git pre-commit hook to detect BOM

if grep -r $'\xEF\xBB\xBF' *.json; then

echo "ERROR: JSON files contain BOM!"

exit 1

fi

- Always strip BOM when reading files:

// Create a safe JSON parse function

function parseJsonSafe(str) {

// Remove BOM if present

if (str.charCodeAt(0) === 0xFEFF) {

str = str.slice(1);

}

// Remove whitespace

str = str.trim();

return JSON.parse(str);

}

- Test files with our JSON Formatter which detects BOM issues

Error #14: Wrong File Encoding

Error message:

SyntaxError: Unexpected token � in JSON

Invalid or unexpected token

What Causes It

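RFC 8259 requires UTF-8 for JSON exchanged between systems. A file saved as Latin-1 or Windows-1252 often still parses, but its non-ASCII characters come out corrupted. A UTF-16 file usually fails outright, because every ASCII character is paired with a zero byte.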

Visual Example

Before (Broken - wrong encoding):

// File saved as Latin-1 (ISO-8859-1), then read as UTF-8

{"name": "Café"}

// Displays as: {"name": "Caf�"} (the Latin-1 byte E9 is not valid UTF-8)

After (Fixed - UTF-8):

{"name": "Café"}

Common Encoding Issues

Issue 1: Non-ASCII characters corrupted

// UTF-8 file decoded as Windows-1252

const garbled = '{"message": "Helloâ„¢"}'; // "™" (bytes E2 84 A2) shows as "â„¢"

// Fixed: decode the file as UTF-8

const json = '{"message": "Hello™"}'; // Correct

Issue 2: UTF-16 BOM confusion

// UTF-16 encoded file

þÿ{ " n a m e " : " J o h n " }

^ "þÿ" is the UTF-16 big-endian BOM (FE FF) shown as Latin-1; the "spaces" are zero bytes

// Fixed: convert to UTF-8

{"name": "John"}
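If you can't control how files are saved, you can let the BOM choose the decoder. Below is a minimal sketch for Node.js. It assumes files without a BOM are UTF-8 (what RFC 8259 requires for exchanged JSON) and it ignores rarer encodings such as UTF-32. decodeJsonBuffer and readJsonFile are hypothetical helper names:

```javascript
// Sketch: pick a decoder from the byte order mark, strip the BOM, then parse.
// Assumes BOM-less files are UTF-8. A UTF-32LE file (BOM FF FE 00 00) would be
// misread as UTF-16LE; that case is out of scope here.
const fs = require('fs');

function decodeJsonBuffer(buffer) {
  if (buffer[0] === 0xEF && buffer[1] === 0xBB && buffer[2] === 0xBF) {
    return buffer.subarray(3).toString('utf8');     // UTF-8 with BOM
  }
  if (buffer[0] === 0xFF && buffer[1] === 0xFE) {
    return buffer.subarray(2).toString('utf16le');  // UTF-16 little-endian
  }
  if (buffer[0] === 0xFE && buffer[1] === 0xFF) {
    // Node has no 'utf16be' decoder: copy, swap each byte pair, decode as LE
    return Buffer.from(buffer.subarray(2)).swap16().toString('utf16le');
  }
  return buffer.toString('utf8');                   // no BOM: assume UTF-8
}

function readJsonFile(path) {
  // Read raw bytes (no encoding argument) so the BOM is still visible
  return JSON.parse(decodeJsonBuffer(fs.readFileSync(path)));
}
```

The function reads the file as a Buffer rather than a string so that it can inspect the BOM bytes before any decoding happens.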

How to Fix It

Fix 1: Detect file encoding (Node.js)

const fs = require('fs');

const jschardet = require('jschardet');

const iconv = require('iconv-lite');

// Read the raw bytes, not a string

const buffer = fs.readFileSync('data.json');

// Guess the encoding (a heuristic: short files can be misdetected, so check the confidence)

const detected = jschardet.detect(buffer);

console.log('Detected encoding:', detected.encoding, 'confidence:', detected.confidence);

// Decode with iconv-lite: Buffer#toString() only understands a few encodings (utf8, utf16le, latin1, ...)

const content = iconv.decode(buffer, detected.encoding || 'utf-8');

// Write the decoded text back out as UTF-8

fs.writeFileSync('data_utf8.json', content, 'utf-8');

Fix 2: Convert encoding using iconv

const iconv = require('iconv-lite');

const fs = require('fs');

// Read file

const buffer = fs.readFileSync('data.json');

// Decode the Latin-1 bytes into a JavaScript string

const text = iconv.decode(buffer, 'latin1');

// Encode that string as UTF-8 bytes

const utf8Bytes = iconv.encode(text, 'utf8');

// Save as UTF-8

fs.writeFileSync('data_fixed.json', utf8Bytes);

Fix 3: Command-line conversion

# Linux/Mac - convert using iconv

iconv -f ISO-8859-1 -t UTF-8 data.json > data_utf8.json

# Check file encoding

file -b --mime-encoding data.json

# Windows PowerShell (in Windows PowerShell 5.1, -Encoding utf8 writes a BOM; PowerShell 7+ does not)

Get-Content data.json | Out-File -Encoding utf8 data_utf8.json

Fix 4: Fix corrupted characters

function fixEncodingIssues(str) {

  // Common mojibake: UTF-8 bytes that were decoded as Latin-1 / Windows-1252

  return str

    .replace(/Ã©/g, 'é')

    .replace(/Ã¨/g, 'è')

    .replace(/Ã\u00A0/g, 'à') // "à" is C3 A0, and A0 is a non-breaking space

    .replace(/Ã§/g, 'ç')

    .replace(/Ã¢/g, 'â')

    .replace(/â„¢/g, '™')

    .replace(/Â©/g, '©')

    .replace(/Â®/g, '®');

}

const broken = '{"name": "CafÃ©"}';

const fixed = fixEncodingIssues(broken);

// {"name": "Café"}
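A replacement table only covers the characters you list. When UTF-8 text has been decoded as Latin-1, you can usually reverse the whole mistake at once: turn each character back into its original byte, then decode those bytes as UTF-8. Below is a minimal sketch for Node.js. fixMojibake is a hypothetical name. It leaves unchanged any string that could not have come from a Latin-1 misread, including Windows-1252 cases such as "â„¢", which the table above handles:

```javascript
// Sketch: undo "UTF-8 bytes decoded as Latin-1" mojibake by re-encoding each
// character as one Latin-1 byte and decoding those bytes as UTF-8.
function fixMojibake(str) {
  // Latin-1 can only represent U+0000..U+00FF; anything higher means this
  // string was not produced by a Latin-1 misread, so leave it alone.
  if (/[^\u0000-\u00FF]/.test(str)) {
    return str;
  }
  const repaired = Buffer.from(str, 'latin1').toString('utf8');
  // Invalid UTF-8 decodes to U+FFFD. If that happens, the input was genuine
  // Latin-1 text (e.g. an already-correct "é"), not mojibake.
  return repaired.includes('\uFFFD') ? str : repaired;
}

console.log(fixMojibake('{"name": "CafÃ©"}')); // {"name": "Café"}
console.log(fixMojibake('Café'));              // Café (already correct, unchanged)
```

The U+FFFD check stops the function from damaging text that was already correct, so it is safe to run it more than once.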

How to Prevent It

- Always save JSON files as UTF-8 without BOM

- Configure your editor:

VS Code:

{

"files.encoding": "utf8",

"[json]": {

"files.encoding": "utf8"

}

}

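- Validate encoding in CI/CD. Pure-ASCII files report "us-ascii", which is also valid UTF-8, so accept both:

# .github/workflows/validate.yml (one step inside a job's steps: list)

- name: Check JSON encoding

  run: |

    find . -name "*.json" -exec file -b --mime-encoding {} \; | grep -vE '^(utf-8|us-ascii)$' && exit 1 || exit 0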

- Use encoding-aware file readers:

// Always specify UTF-8 when reading

const fs = require('fs');

const content = fs.readFileSync('data.json', 'utf-8'); // Explicit encoding

// Or use promises (await needs an async function or an ES module)

async function readJson() {

  const text = await fs.promises.readFile('data.json', { encoding: 'utf-8' });

  return JSON.parse(text);

}

- Test with international characters using our JSON Formatter

Error #15: Exceeding Nested Depth Limits

Error message:

RangeError: Maximum call stack size exceeded

Error: JSON nested too deeply

Parser error: nesting depth exceeded

What Causes It

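Deeply nested objects or arrays can exceed a parser's nesting limit. Some parsers set an explicit cap (PHP's json_decode() defaults to a depth of 512). Others are limited by recursion (Python's json module stops at the interpreter's recursion limit, 1000 by default). These limits exist partly to block stack-exhaustion attacks. Recursive serializers, and any of your own code that walks the data recursively, can run out of stack in the same way.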

Visual Example

Before (Broken - too deeply nested):

{

"level1": {

"level2": {

"level3": {

... // levels 4 through 99 omitted

"level100": {

"data": "value"

}

}

}

}

}

After (Fixed - flattened structure):

{

"path": "level1.level2.level3.level100",

"data": "value"

}

Or use an array:

{

"levels": [

{"name": "level1"},

{"name": "level2"},

{"name": "level3"},

{"name": "level100", "data": "value"}

]

}

Parser Limits

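| Parser | Default depth limit |
|---|---|
| V8 (Chrome, Node.js) | No documented fixed limit; very deep input is bounded by stack or memory |
| Firefox (SpiderMonkey) | No documented fixed limit; bounded by stack or memory |
| Python json | Interpreter recursion limit (sys.getrecursionlimit(), 1000 by default) |
| Java Jackson | 1000 in 2.15+ (configurable via StreamReadConstraints) |
| PHP json_decode | 512 (the default of the depth argument) |

These limits vary by version, so measure them in your own runtime before relying on them.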

Real-World Causes

Cause 1: Recursive data structures

function generateDeepObject(depth) {

if (depth === 0) {

return { value: "deep" };

}

return { nested: generateDeepObject(depth - 1) };

}

const tooDeep = generateDeepObject(1000);

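JSON.stringify(tooDeep); // Usually fine in V8, but 1,001 levels exceeds PHP json_decode's default depth of 512 and can hit Python's recursion limit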

Cause 2: Deeply nested API responses

{

"user": {

"profile": {

"settings": {

"preferences": {

"display": {

"theme": {

"colors": {

"primary": {

"shade": {

"light": "#fff"

}

}

}

}

}

}

}

}

}

}

Cause 3: Badly designed data transformations

// Accidentally creates deep nesting

function wrapData(data, depth) {

let result = data;

for (let i = 0; i < depth; i++) {

result = { wrapper: result };

}

return result;

}

const wrapped = wrapData({ value: 1 }, 1000);

JSON.stringify(wrapped); // Usually fine in V8, but the result is 1,001 levels deep, past PHP json_decode's default of 512

How to Fix It

Fix 1: Flatten the structure

function flattenObject(obj, prefix = '') {

const flattened = {};

for (const [key, value] of Object.entries(obj)) {

const newKey = prefix ? `${prefix}.${key}` : key;

if (typeof value === 'object' && value !== null && !Array.isArray(value)) {

Object.assign(flattened, flattenObject(value, newKey));

} else {

flattened[newKey] = value;

}

}

return flattened;

}

const deep = {

a: {

b: {

c: {

d: "value"

}

}

}

};

const flat = flattenObject(deep);

// { "a.b.c.d": "value" }

JSON.stringify(flat); // Works!
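A flattened payload usually has to be turned back into nested objects on the receiving side. Below is a minimal sketch of the inverse of flattenObject(). It assumes no original key contained a literal "." (flattenObject() has the same ambiguity). unflattenObject is a hypothetical name:

```javascript
// Inverse of flattenObject(): turns { "a.b.c": 1 } back into { a: { b: { c: 1 } } }.
// Assumes no original key contained "." (flattenObject() can't distinguish those either).
function unflattenObject(flat) {
  const result = {};
  for (const [path, value] of Object.entries(flat)) {
    const parts = path.split('.');
    // Skip keys that could modify Object.prototype ("prototype pollution")
    if (parts.some((p) => p === '__proto__' || p === 'constructor' || p === 'prototype')) {
      continue;
    }
    let node = result;
    // Create or walk an object for every segment except the last
    for (const part of parts.slice(0, -1)) {
      if (typeof node[part] !== 'object' || node[part] === null) {
        node[part] = {};
      }
      node = node[part];
    }
    node[parts[parts.length - 1]] = value;
  }
  return result;
}

console.log(JSON.stringify(unflattenObject({ 'a.b.c.d': 'value' })));
// {"a":{"b":{"c":{"d":"value"}}}}
```

The prototype-pollution guard matters because flattened keys often come from untrusted input, such as query strings or API payloads.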

Fix 2: Limit nesting depth

function limitDepth(obj, maxDepth, currentDepth = 0) {

if (currentDepth >= maxDepth) {

return "[Max depth reached]";

}

if (typeof obj !== 'object' || obj === null) {

return obj;

}

if (Array.isArray(obj)) {

return obj.map(item => limitDepth(item, maxDepth, currentDepth + 1));

}

const result = {};

for (const [key, value] of Object.entries(obj)) {

result[key] = limitDepth(value, maxDepth, currentDepth + 1);

}

return result;

}

const deep = generateDeepObject(1000);

const limited = limitDepth(deep, 10);

JSON.stringify(limited); // Works! Max 10 levels

Fix 3: Use arrays instead of nested objects

// ❌ DEEP nesting

{

"root": {

"child1": {

"child2": {

"child3": "value"

}

}

}

}

// ✅ FLAT array

{

"path": [

{"id": "root", "parentId": null},

{"id": "child1", "parentId": "root"},

{"id": "child2", "parentId": "child1"},

{"id": "child3", "parentId": "child2", "value": "value"}

]

}

Fix 4: Check depth before serialization

function getMaxDepth(obj, currentDepth = 0) {

if (typeof obj !== 'object' || obj === null) {

return currentDepth;

}

let maxDepth = currentDepth;

for (const value of Object.values(obj)) {

const depth = getMaxDepth(value, currentDepth + 1);

maxDepth = Math.max(maxDepth, depth);

}

return maxDepth;

}

const data = { a: { b: { c: { d: "value" } } } };

const depth = getMaxDepth(data);

console.log('Max depth:', depth); // 4

if (depth > 100) {

console.error('Data too deeply nested!');

}
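getMaxDepth() is itself recursive, so it can overflow the stack on the very inputs it is meant to catch. Below is a minimal iterative sketch that computes the same number with an explicit stack. It assumes the data has no circular references, which is true of anything that came from JSON.parse(). getMaxDepthIterative is a hypothetical name:

```javascript
// Same result as getMaxDepth(), but uses an explicit stack instead of recursion,
// so depth is limited only by memory. Assumes the data has no cycles.
function getMaxDepthIterative(root) {
  let maxDepth = 0;
  const stack = [[root, 0]]; // pairs of [value, depth of that value]

  while (stack.length > 0) {
    const [value, depth] = stack.pop();
    maxDepth = Math.max(maxDepth, depth);
    if (typeof value === 'object' && value !== null) {
      // Object.values() also works for arrays (returns the elements)
      for (const child of Object.values(value)) {
        stack.push([child, depth + 1]);
      }
    }
  }
  return maxDepth;
}

// Build a 100,000-level structure without recursion
let veryDeep = 'leaf';
for (let i = 0; i < 100000; i++) {
  veryDeep = { nested: veryDeep };
}
console.log(getMaxDepthIterative(veryDeep)); // 100000
```

Run this check before JSON.stringify() or any recursive processing, so that oversized input is rejected before it can exhaust the stack.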

How to Prevent It

- Design flat data structures from the start

- Normalize nested data:

// Instead of nested comments

{

"post": {

"comments": [

{

"text": "Comment 1",

"replies": [

{

"text": "Reply 1",

"replies": [...]

}

]

}

]

}

}

// Use normalized structure

{

"posts": [

{"id": "post-1", "title": "Post"}

],

"comments": [

{"id": "c1", "postId": "post-1", "parentId": null, "text": "Comment 1"},

{"id": "c2", "postId": "post-1", "parentId": "c1", "text": "Reply 1"}

]

}

- Validate depth in tests:

describe('JSON depth', () => {

it('should not exceed depth of 10', () => {

const depth = getMaxDepth(apiResponse);

expect(depth).toBeLessThanOrEqual(10);

});

});

- Use our JSON Formatter to analyze structure depth
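A normalized payload stays flat on the wire, and you rebuild the nesting in memory only when you need it (for example, to render a reply thread). Below is a minimal sketch that uses the comment fields from the normalized example above. buildCommentTree is a hypothetical helper. It treats comments whose parentId is missing from the list as top-level and assumes the parentId links contain no cycles:

```javascript
// Rebuild a reply tree from a flat list of { id, parentId, ... } records.
// Two passes over the list, so the order of the records doesn't matter.
function buildCommentTree(comments) {
  const byId = new Map();

  // Pass 1: copy each comment and give it an empty replies array
  for (const comment of comments) {
    byId.set(comment.id, { ...comment, replies: [] });
  }

  // Pass 2: attach each comment to its parent, or to the top level
  const roots = [];
  for (const node of byId.values()) {
    const parent = node.parentId === null ? undefined : byId.get(node.parentId);
    if (parent) {
      parent.replies.push(node);
    } else {
      roots.push(node); // top-level comment, or an orphan whose parent is missing
    }
  }
  return roots;
}

const comments = [
  { id: 'c1', postId: 'post-1', parentId: null, text: 'Comment 1' },
  { id: 'c2', postId: 'post-1', parentId: 'c1', text: 'Reply 1' }
];
const thread = buildCommentTree(comments);
console.log(thread[0].replies[0].text); // Reply 1
```

Keep the rebuilt tree out of the JSON you send. Serializing `thread` would reintroduce the deep nesting that normalization avoids.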

Best Practices for JSON Debugging

Now that you know the 15 common errors, here are proven strategies to debug JSON faster.

Practice 1: Use Proper Tools

Online validators:

- JSON Formatter - Instant validation, formatting, and error detection

- Diff Tool - Compare two JSON files to find differences

Command-line tools:

# Validate JSON syntax

python -m json.tool data.json

# Pretty-print JSON

cat data.json | python -m json.tool

# Using jq

jq . data.json # Validates and pretty-prints

# Node.js one-liner

node -e "console.log(JSON.stringify(JSON.parse(require('fs').readFileSync('data.json')), null, 2))"

Code editor extensions:

- VS Code: JSON Tools, Prettier

- Sublime Text: Pretty JSON

- IntelliJ IDEA: Built-in JSON validator

Practice 2: Add Comprehensive Error Handling

Basic try-catch:

function parseJsonSafe(jsonString) {

  try {

    return JSON.parse(jsonString);

  } catch (error) {

    console.error('JSON parse error:', error.message);

    // Engine messages often (not always) include "at position N"

    const match = error.message.match(/position (\d+)/);

    if (match) {

      const position = Number(match[1]); // convert first: "47" + 20 would be "4720"

      console.error('At position:', position);

      console.error('Problematic section:', jsonString.substring(

        Math.max(0, position - 20),

        Math.min(jsonString.length, position + 20)

      ));

    }

    return null;

  }

}

Advanced error context:

function parseJsonWithContext(jsonString) {

try {

return { success: true, data: JSON.parse(jsonString) };

} catch (error) {

const position = parseInt(error.message.match(/position (\d+)/)?.[1] || '0');

const lines = jsonString.split('\n');

let currentPos = 0;

let lineNumber = 0;

let columnNumber = 0;

for (let i = 0; i < lines.length; i++) {

if (currentPos + lines[i].length >= position) {

lineNumber = i + 1;

columnNumber = position - currentPos;

break;

}

currentPos += lines[i].length + 1; // +1 for newline

}

return {

success: false,

error: error.message,

line: lineNumber,

column: columnNumber,

context: lines[lineNumber - 1],

suggestion: diagnoseSyntaxError(lines[lineNumber - 1], columnNumber)

};

}

}

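function diagnoseSyntaxError(line, column) {

  if (line === undefined) return "Check JSON syntax near the reported position";

  const char = line[column];

  // Last non-whitespace character before the error position

  const before = line.slice(0, column).trimEnd().slice(-1);

  if (char === "'") return "Use double quotes (\") instead of single quotes (')";

  // Parsers typically report a trailing comma at the } or ] that follows it, not at the comma

  if ((char === "}" || char === "]") && before === ",") return "Remove trailing comma";

  if (!char) return "Missing closing bracket or quote";

  return "Check JSON syntax at this position";

}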

Practice 3: Validate Before Production

Pre-commit hook:

#!/bin/bash

# .git/hooks/pre-commit

echo "Validating JSON files..."

for file in $(git diff --cached --name-only --diff-filter=ACM | grep -E '\.json$'); do

if ! python -m json.tool "$file" > /dev/null 2>&1; then

echo "❌ Invalid JSON: $file"

exit 1

fi

done

echo "✅ All JSON files are valid"

CI/CD validation:

# .github/workflows/validate.yml

name: Validate JSON

on: [push, pull_request]

jobs:

  validate:

    runs-on: ubuntu-latest

    steps:

      - uses: actions/checkout@v4

      - name: Validate JSON files

        run: |

          # Ending -exec with "+" (not "\;") makes find exit non-zero when a check fails

          find . -name "*.json" -not -path "./node_modules/*" -exec sh -c '

            for f in "$@"; do python3 -m json.tool "$f" > /dev/null || exit 1; done

          ' _ {} +

Runtime validation:

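const Ajv = require('ajv');

// Ajv v8 does not support "format" keywords such as "email" out of the box; ajv-formats adds them

const addFormats = require('ajv-formats');

const ajv = new Ajv();

addFormats(ajv);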

const schema = {

type: "object",

properties: {

name: { type: "string" },

age: { type: "number", minimum: 0 },

email: { type: "string", format: "email" }

},

required: ["name", "email"],

additionalProperties: false

};

const validate = ajv.compile(schema);

function validateUserData(data) {

const valid = validate(data);

if (!valid) {

console.error('Validation errors:', validate.errors);

return false;

}

return true;

}

Practice 4: Use JSON Schema

Define your data structure:

{

"$schema": "http://json-schema.org/draft-07/schema#",

"title": "User",

"type": "object",

"properties": {

"id": {

"type": "integer",

"minimum": 1

},

"name": {

"type": "string",

"minLength": 1,

"maxLength": 100

},

"email": {

"type": "string",

"format": "email"

},

"role": {

"type": "string",

"enum": ["admin", "user", "guest"]

}

},

"required": ["id", "name", "email"]

}

Validate against schema:

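const Ajv = require('ajv');

const addFormats = require('ajv-formats'); // needed for "format": "email" in Ajv v8

const ajv = new Ajv();

addFormats(ajv);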

const schema = require('./user-schema.json');

const validate = ajv.compile(schema);

const userData = {

id: 1,

name: "John Doe",

email: "john@example.com",

role: "admin"

};

if (validate(userData)) {

console.log('✅ Valid!');

} else {

console.log('❌ Invalid:', validate.errors);

}

Practice 5: Log and Monitor JSON Errors

Structured logging:

function logJsonError(error, jsonString, context = {}) {

console.error({

timestamp: new Date().toISOString(),

error: 'JSON_PARSE_ERROR',

message: error.message,

position: error.message.match(/position (\d+)/)?.[1],

preview: jsonString.substring(0, 100),

context: context,

stack: error.stack

});

}

const invalidJson = '{"name": "John",}'; // trailing comma

try {

JSON.parse(invalidJson);

} catch (error) {

logJsonError(error, invalidJson, { source: 'API', endpoint: '/user/123' });

}

Monitor JSON errors in production:

// Using Sentry or similar

const Sentry = require('@sentry/node');

function parseJsonWithMonitoring(jsonString, context) {

try {

return JSON.parse(jsonString);

} catch (error) {

Sentry.captureException(error, {

extra: {

jsonPreview: jsonString.substring(0, 500),

context: context

},

tags: {

error_type: 'json_parse'

}

});

throw error;

}

}

Tools and Resources

Essential Developer Tools

JSON Processing:

- JSON Formatter - Validate, format, and fix JSON syntax

- JSON Diff - Compare two JSON files or objects

- JSON Converter - Convert between JSON, XML, YAML, and other formats

String and Data Tools:

- String Case Converter - Convert JSON keys between naming conventions

- Encoder/Decoder - Base64 encode/decode JSON data

- Hash Generator - Generate checksums for JSON files

Development Tools:

- UUID Generator - Generate unique IDs for JSON data

- Random String Generator - Create test data

- Checksum Calculator - Verify JSON file integrity

API Testing:

- HTTP Headers Checker - Inspect API Content-Type headers

- JWT Decoder - Decode JSON Web Tokens

View all tools: Developer Tools

Learning Resources

Official Specifications:

- JSON.org - Official JSON specification

- RFC 8259 - The JSON Data Interchange Format

- ECMA-404 - JSON standard

API Documentation:

- MDN: JSON - JavaScript JSON reference

- JSON Schema - Validation and documentation

Related Articles:

- 50 JSON API Response Examples - Real-world JSON patterns

- JSON vs XML vs YAML - Choosing data formats

- How to Format JSON Online - Quick formatting guide

Conclusion: Master JSON Debugging

You’ve now learned the 15 most common JSON errors and exactly how to fix them:

- Unexpected tokens - Check for HTML responses and undefined values

- Unterminated strings - Close all quotes and escape internal quotes

- Missing commas - Add commas between properties (except the last)

- Trailing commas - Remove commas after the last item

- Single quotes - Always use double quotes

- Unescaped characters - Escape special characters in strings

- Invalid numbers - Follow strict number format rules

- NaN/Infinity - Replace with null or strings

- Undefined values - Use null instead

- Circular references - Use IDs instead of object references

- Duplicate keys - Rename or use arrays

- Invalid Unicode - Use proper \uXXXX escape sequences

- BOM issues - Remove byte order marks

- Wrong encoding - Save as UTF-8

- Deep nesting - Flatten structures or limit depth

Your JSON Debugging Checklist

Before deploying JSON:

- ✅ Validate with a JSON parser

- ✅ Check for trailing commas

- ✅ Verify all quotes are double quotes

- ✅ Ensure UTF-8 encoding without BOM

- ✅ Test with special characters and Unicode

- ✅ Validate nesting depth is reasonable

- ✅ Use JSON.stringify() instead of manual strings

- ✅ Add try-catch error handling

- ✅ Test with our JSON Formatter

When debugging JSON errors:

- Read the error message carefully - it usually tells you the position

- Look at the context around the error position

- Check for common mistakes (quotes, commas, escape sequences)

- Use a validator to get better error messages

- Compare with working examples

- Test incrementally to isolate the problem

Next Steps

- Bookmark our JSON Formatter for instant validation

- Set up linting in your code editor to catch errors early

- Implement error handling in all JSON parsing code

- Add validation to your API endpoints

- Share this guide with your team to prevent common mistakes

Found this helpful? Share it with your team and bookmark our developer tools for your next project.

Still stuck on a JSON error? Contact us or visit our FAQ for more help.

Last Updated: November 1, 2025

Reading Time: 16 minutes

Author: Orbit2x Team